[PYTHON] [Translation] scikit-learn 0.18 User Guide 3.5. Validation curve: Plot the score to evaluate the model

Google translation of http://scikit-learn.org/0.18/modules/learning_curve.html, from [scikit-learn 0.18 User Guide 3. Model Selection and Evaluation](http://qiita.com/nazoking@github/items/267f2371757516f8c168#3-%E3%83%A2%E3%83%87%E3%83%AB%E3%81%AE%E9%81%B8%E6%8A%9E%E3%81%A8%E8%A9%95%E4%BE%A1).

3.5. Validation curve: Plot the score to evaluate the model

Every estimator has its advantages and drawbacks. Its generalization error can be decomposed into bias, variance and noise. The **bias** of an estimator is its average error over different training sets. The **variance** of an estimator indicates how sensitive it is to varying training sets. Noise is a property of the data.
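For squared-error loss (an assumption; the text does not fix a loss), the decomposition can be written out explicitly. Assume $y = f(x) + \varepsilon$ with $\mathbb{E}[\varepsilon] = 0$, $\mathrm{Var}(\varepsilon) = \sigma^2$, noise independent of the training set $D$, and let $\hat{f}_D$ be the estimator trained on $D$:

$$
\mathbb{E}_{D,\varepsilon}\left[(y - \hat{f}_D(x))^2\right]
= \underbrace{\left(f(x) - \mathbb{E}_D[\hat{f}_D(x)]\right)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}_D\left[\left(\hat{f}_D(x) - \mathbb{E}_D[\hat{f}_D(x)]\right)^2\right]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{noise}}
$$

Only the first two terms depend on the estimator; the noise term is a floor that no choice of model can remove.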

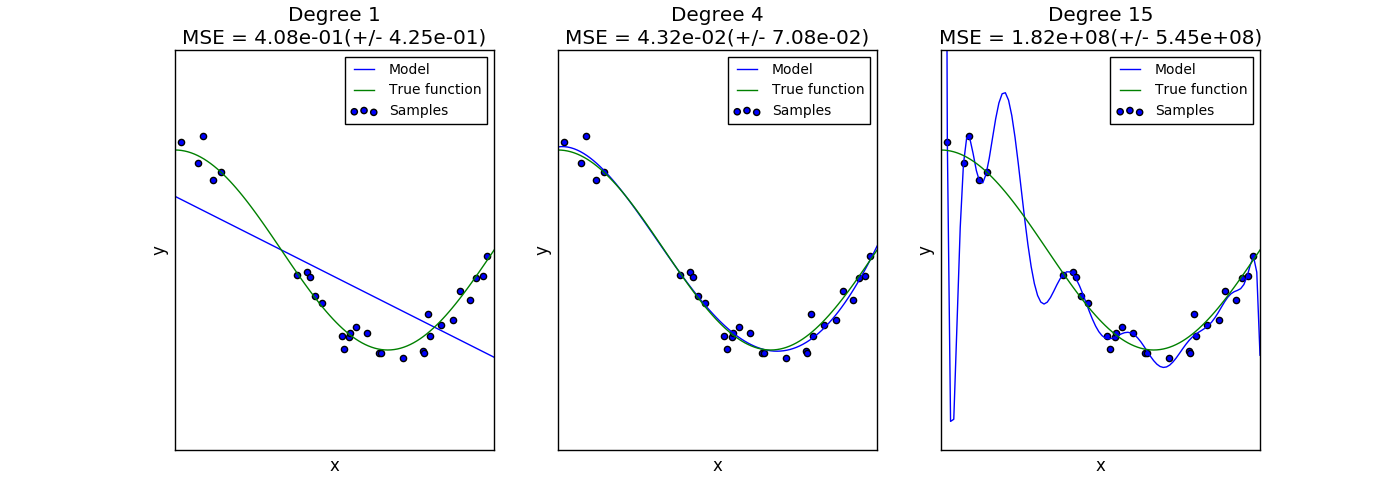

The plot shows the function $f(x) = \cos(\frac{3}{2} \pi x)$ and some noisy samples from that function. We use three different estimators to fit the function: linear regression with polynomial features of degree 1, 4 and 15. The first estimator is too simple to fit the samples and the true function well (high bias). The second estimator approximates the function almost perfectly. The last estimator fits the training data perfectly but does not fit the true function very well; that is, it is very sensitive to changes in the training data (high variance).
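The "high bias" and "high variance" labels above can be checked numerically by refitting each model on many independent noisy training sets and measuring, on a grid of points, how far the average prediction is from $f$ (squared bias) and how much the predictions scatter (variance). This is a minimal Monte Carlo sketch, not the code behind the plot: the sample size (30), noise level (0.1), number of trials and evaluation grid are all assumptions, and `true_fn` and `bias_variance` are hypothetical helper names.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures


def true_fn(x):
    """The target function f(x) = cos(3/2 * pi * x) from the text."""
    return np.cos(1.5 * np.pi * x)


def bias_variance(degree, n_samples=30, n_trials=200, noise=0.1, seed=0):
    """Monte Carlo estimate of squared bias and variance of a polynomial fit.

    n_samples, n_trials and noise are illustrative assumptions.
    """
    rng = np.random.RandomState(seed)
    x_eval = np.linspace(0.05, 0.95, 50)  # points where bias/variance are measured
    preds = np.empty((n_trials, x_eval.size))
    for t in range(n_trials):
        # Draw a fresh noisy training set on every trial.
        x = rng.rand(n_samples)
        y = true_fn(x) + noise * rng.randn(n_samples)
        model = make_pipeline(PolynomialFeatures(degree, include_bias=False),
                              LinearRegression())
        model.fit(x[:, np.newaxis], y)
        preds[t] = model.predict(x_eval[:, np.newaxis])
    mean_pred = preds.mean(axis=0)
    bias_sq = np.mean((mean_pred - true_fn(x_eval)) ** 2)  # averaged over x_eval
    variance = np.mean(preds.var(axis=0))                    # averaged over x_eval
    return bias_sq, variance


results = {d: bias_variance(d) for d in (1, 4, 15)}
for d, (b, v) in results.items():
    print(f"degree {d:2d}: bias^2 = {b:.4g}, variance = {v:.4g}")
```

With these settings one would expect degree 1 to show the largest squared bias and degree 15 the largest variance, with degree 4 low on both.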

Bias and variance are inherent properties of estimators, and we usually have to choose a learning algorithm and hyperparameters so that both bias and variance are as low as possible (see [Bias-variance dilemma](https://en.wikipedia.org/wiki/Bias-variance_dilemma)). Another way to reduce the variance of a model is to use more training data. However, you should only collect more training data if the true function is too complex to be approximated by an estimator with lower variance. In a simple one-dimensional problem like the one in this example, it is easy to see whether the estimator suffers from bias or variance. In high-dimensional spaces, however, models can become very difficult to visualize. For this reason, the tools described below are often helpful.
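The claim that more training data reduces variance can be illustrated with the degree-15 model from the example: refit it on many independent training sets of increasing size and average the variance of its predictions. This is a sketch under assumed settings (noise 0.1, 100 trials, sample sizes 30, 300 and 3000); `prediction_variance` is a hypothetical helper, not part of scikit-learn.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures


def prediction_variance(n_samples, degree=15, n_trials=100, noise=0.1, seed=0):
    """Variance of the fitted curve across independent training sets,
    averaged over an evaluation grid (all settings are assumptions)."""
    rng = np.random.RandomState(seed)
    x_grid = np.linspace(0.05, 0.95, 50)[:, np.newaxis]
    preds = []
    for _ in range(n_trials):
        x = rng.rand(n_samples)
        y = np.cos(1.5 * np.pi * x) + noise * rng.randn(n_samples)
        model = make_pipeline(PolynomialFeatures(degree, include_bias=False),
                              LinearRegression())
        preds.append(model.fit(x[:, np.newaxis], y).predict(x_grid))
    return np.mean(np.var(preds, axis=0))


variances = {n: prediction_variance(n) for n in (30, 300, 3000)}
for n, v in variances.items():
    print(f"n_samples={n:5d}: variance = {v:.4g}")
```

The model's flexibility is unchanged; only the amount of data grows, so any drop in the printed variance comes from the training sets becoming more representative.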

3.5.1. Validation curve

To validate a model, we need a scoring function (see [Model evaluation: quantifying the quality of predictions](http://qiita.com/nazoking@github/items/958426da6448d74279c7)), for example the accuracy of a classifier. The proper way to choose multiple hyperparameters of an estimator is grid search or a similar method (see [Tuning the hyperparameters of an estimator](http://qiita.com/nazoking@github/items/09a4c63614797a6bd705)) that selects the hyperparameters with the highest score on a validation set or on multiple validation sets. Note that if we optimize the hyperparameters based on a validation score, the validation score is biased and no longer a good estimate of generalization. To get a proper estimate of generalization, we have to compute the score on a separate test set. However, it is sometimes helpful to plot the influence of a single hyperparameter on the training and validation scores to find out whether the estimator is overfitting or underfitting for some hyperparameter values. The function validation_curve can help in this case:

>>> import numpy as np
>>> from sklearn.model_selection import validation_curve
>>> from sklearn.datasets import load_iris
>>> from sklearn.linear_model import Ridge
>>> np.random.seed(0)
>>> iris = load_iris()
>>> X, y = iris.data, iris.target
>>> # iris is sorted by class, so shuffle the samples before cross-validation
>>> indices = np.arange(y.shape[0])
>>> np.random.shuffle(indices)
>>> X, y = X[indices], y[indices]
>>> # Rows of each result are alpha values, columns are CV folds (cv=3 was the 0.18 default)
>>> train_scores, valid_scores = validation_curve(
...     Ridge(), X, y, param_name="alpha", param_range=np.logspace(-7, 3, 3), cv=3)
>>> train_scores
array([[ 0.94...,  0.92...,  0.92...],
       [ 0.94...,  0.92...,  0.92...],
       [ 0.47...,  0.45...,  0.42...]])
>>> valid_scores
array([[ 0.90...,  0.92...,  0.94...],
       [ 0.90...,  0.92...,  0.94...],
       [ 0.44...,  0.39...,  0.45...]])
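The warning that a validation score used for tuning is optimistically biased suggests a simple workflow: hold out a test set first, use validation_curve only on the remaining data to pick a hyperparameter, and report the score on the untouched test set. This is a minimal sketch assuming a 25% test split, an 11-point alpha grid and 5 folds (all arbitrary choices); like the example above, it treats the iris class labels as a regression target for Ridge.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import Ridge
from sklearn.model_selection import train_test_split, validation_curve

X, y = load_iris(return_X_y=True)

# Hold out a test set that plays no part in choosing alpha.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0)

alphas = np.logspace(-7, 3, 11)
train_scores, valid_scores = validation_curve(
    Ridge(), X_train, y_train, param_name="alpha", param_range=alphas, cv=5)

# Rows correspond to alpha values, columns to CV folds: average over folds.
mean_valid = valid_scores.mean(axis=1)
best_alpha = alphas[np.argmax(mean_valid)]

# mean_valid.max() is biased upward because alpha was chosen to maximize it;
# the test score is the unbiased estimate of generalization.
final_model = Ridge(alpha=best_alpha).fit(X_train, y_train)
test_score = final_model.score(X_test, y_test)
print(f"best alpha = {best_alpha:g}, CV R^2 = {mean_valid.max():.3f}, "
      f"test R^2 = {test_score:.3f}")
```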

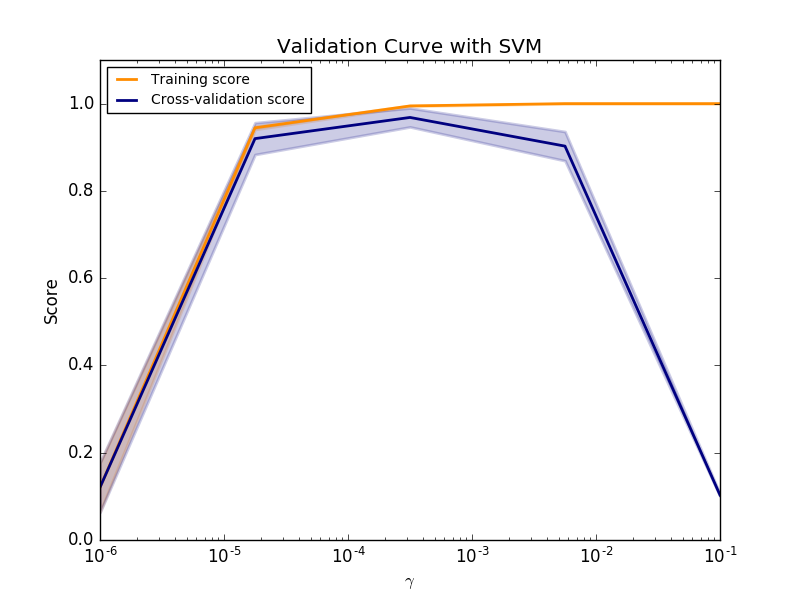

If the training score and the validation score are both low, the estimator is underfitting. If the training score is high and the validation score is low, the estimator is overfitting; otherwise, it is working very well. A low training score combined with a high validation score is usually not possible. All three cases can be seen in the plot, where the SVM parameter $\gamma$ is varied on the digits dataset.
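The three-way reading above can be turned into a rough rule of thumb applied to the fold-averaged scores from validation_curve. This sketch assumes an SVC with default C, 5-fold cross-validation and a gamma grid from 1e-6 to 1e-1; `diagnose` is a hypothetical helper, and its thresholds (0.8 for "low", 0.1 for the train/validation gap) are arbitrary choices, not values from the text.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import validation_curve
from sklearn.svm import SVC


def diagnose(train_score, valid_score, low=0.8, max_gap=0.1):
    """Hypothetical rule of thumb; the thresholds are arbitrary assumptions."""
    if train_score < low and valid_score < low:
        return "underfitting"
    if train_score - valid_score > max_gap:
        return "overfitting"
    return "good fit"


X, y = load_digits(return_X_y=True)
gammas = np.logspace(-6, -1, 6)  # 1e-6, 1e-5, ..., 1e-1
train_scores, valid_scores = validation_curve(
    SVC(), X, y, param_name="gamma", param_range=gammas, cv=5)

labels = []
for gamma, tr, va in zip(gammas, train_scores.mean(axis=1),
                         valid_scores.mean(axis=1)):
    labels.append(diagnose(tr, va))
    print(f"gamma={gamma:.0e}  train={tr:.3f}  valid={va:.3f}  -> {labels[-1]}")
```

Very large gamma makes the RBF kernel nearly zero between distinct samples, so the SVM memorizes the training set; that is the overfitting end of the curve.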

3.5.2. Learning curve

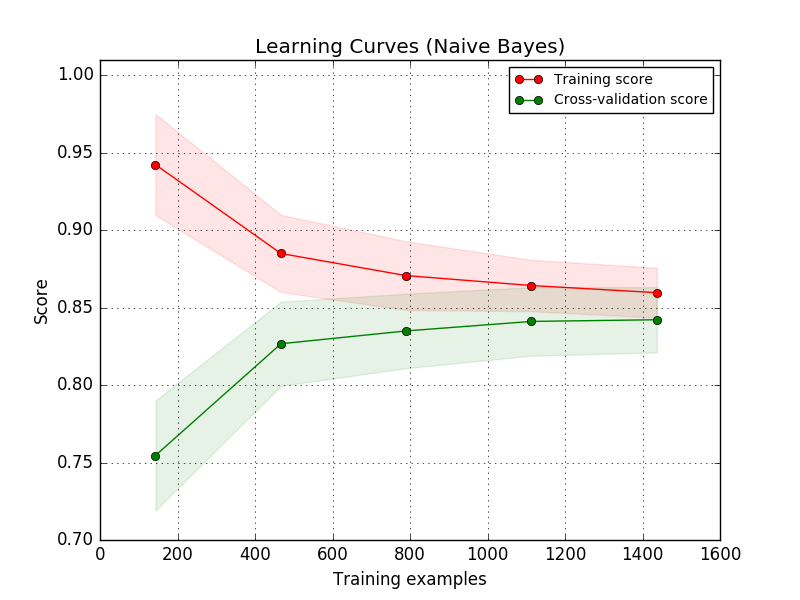

A learning curve shows the validation and training scores of an estimator for varying numbers of training samples. It is a tool to find out how much we benefit from adding more training data and whether the estimator suffers more from a variance error or a bias error. If both the validation score and the training score converge to a value that is too low as the size of the training set increases, we will not benefit much from more training data. The plot shows an example: naive Bayes roughly converges to a low score.

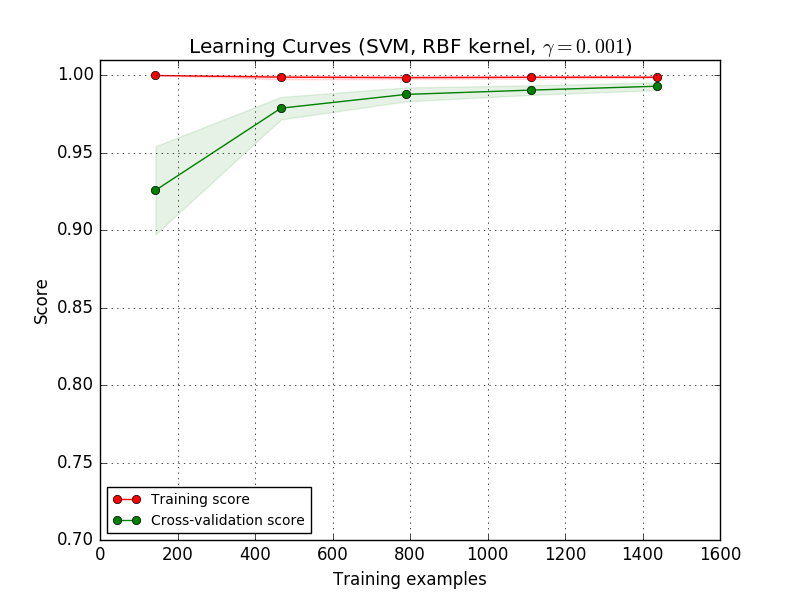

In that case, we will probably have to use an estimator, or a parameterization of the current estimator, that can learn more complex concepts (i.e., one with lower bias). If the training score is much higher than the validation score for the maximum number of training samples, adding more training samples will most likely improve generalization. In the plot, we can see that the SVM could benefit from more training examples.

The function learning_curve generates the values needed to plot such a learning curve: the numbers of training samples that were used, and the training and validation scores for each cross-validation split (averaging over the splits gives the mean scores to plot):

>>> from sklearn.model_selection import learning_curve
>>> from sklearn.svm import SVC
>>> train_sizes, train_scores, valid_scores = learning_curve(
...     SVC(kernel='linear'), X, y, train_sizes=[50, 80, 110], cv=5)
>>> train_sizes
array([ 50,  80, 110])
>>> train_scores
array([[ 0.98...,  0.98   ,  0.98...,  0.98...,  0.98...],
       [ 0.98...,  1.     ,  0.98...,  0.98...,  0.98...],
       [ 0.98...,  1.     ,  0.98...,  0.98...,  0.99...]])
>>> valid_scores
array([[ 1.     ,  0.93...,  1.     ,  1.     ,  0.96...],
       [ 1.     ,  0.96...,  1.     ,  1.     ,  0.96...],
       [ 1.     ,  0.96...,  1.     ,  1.     ,  0.96...]])
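To read a learning curve the way the text describes, one can average the per-split scores and look at two numbers at the largest training size: the gap between training and validation score (large gap suggests more data may help) and how much the validation score still rose over the last step (near zero suggests it has converged). This is a sketch assuming GaussianNB and SVC(gamma=0.001) on the digits dataset with 5-fold cross-validation and five relative training sizes; these estimators and settings are illustrative assumptions, not necessarily those behind the plots.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import learning_curve
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC

X, y = load_digits(return_X_y=True)
results = {}
for name, estimator in [("GaussianNB", GaussianNB()), ("SVC", SVC(gamma=0.001))]:
    sizes, train_scores, valid_scores = learning_curve(
        estimator, X, y, train_sizes=np.linspace(0.1, 1.0, 5), cv=5)
    # Average over the CV splits (columns) for each training size (rows).
    train_mean = train_scores.mean(axis=1)
    valid_mean = valid_scores.mean(axis=1)
    results[name] = (sizes, train_mean, valid_mean)
    gap = train_mean[-1] - valid_mean[-1]        # train/validation gap at max size
    last_gain = valid_mean[-1] - valid_mean[-2]  # validation gain over the last step
    print(f"{name}: sizes={sizes.tolist()}  final train={train_mean[-1]:.3f}  "
          f"final valid={valid_mean[-1]:.3f}  gap={gap:.3f}  last gain={last_gain:.3f}")
```

A low, flat validation curve with a small gap points to a bias problem (change the model), while a persistent gap points to a variance problem (more data is likely to help).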


© 2010 --2016, scikit-learn developers (BSD license).