Converting any image you pass in to ASCII art, Jason Statham style, in Python

I wrote a program that generates ASCII art (AA) automatically.

Pass it a `keyword`, a `URL`, or a `file path`, and the image is converted to AA automatically.

Criminal action machine

Hello. I'm a bot who has been churning out dull articles for years. The other day my notifications, normally as quiet as Shizukanaru Don, were unusually orange. To my surprise, it seems that **an avid Jason Statham enthusiast** had followed me. I had already seen several of their wonderful articles, filled with love and courage, and since the No. 1 idol in the universe, **Nikonii**, appeared in a screenshot in one of them, I figured I would write a Jason Statham article too! ~~(mystery)~~ **I want [this tag](https://qiita.com/tags/%e3%82%b8%e3%82%a7%e3%82%a4%e3%82%bd%e3%83%b3%e3%83%bb%e3%82%b9%e3%83%86%e3%82%a4%e3%82%b5%e3%83%a0) to be popular!**

Digression about Jason

That said, I like him so much that every time I watch one of Jason's movies, I take up weight training, for all of three days.

I like all of his films, but my favorite has to be SPY, which is a waste of a perfectly good Jason Statham (?).

He's great when he plays it cool, as in The Mechanic, but personally I like him best when he goes all-out silly, as in Crank (released in Japan as Adrenaline): shirt off! Muscles! Explosions! Chest hair! Fu●k off!!

Even when he isn't the lead, as in The Italian Job or Snatch, he has a strong presence and, if anything, ends up the coolest one on screen. He gives a film both its tension and its relief, and I think he's a truly amazing actor.

I'm lonely because there aren't many people my age who can talk about Western movies.

By the way, I also recommend Brad Pitt.

It's an obvious pick, but it's a superb film, so please do watch `Fight Club`.

I'm sorry to pollute the tag with such a bad article.

But **I want [this tag](https://qiita.com/tags/%e3%82%b8%e3%82%a7%e3%82%a4%e3%82%bd%e3%83%b3%e3%83%bb%e3%82%b9%e3%83%86%e3%82%a4%e3%82%b5%e3%83%a0) to be popular!** (Second time)

Overview

There are already articles on generating `AA` automatically with `Python`. Drawing on those, this one implements it with `Pillow` and `NumPy`.

Reference part 1 ・ Reference part 2

What is AA

`AA` is short for `ASCII Art`: a picture drawn using only characters. It originated in an era when images could not be exchanged over the Internet the way they are now, but it is still familiar to those of us whose address is an IP address and whose occupation is home security guard. In the hands of a master, the outlines are captured well and the result is finished as line art.

I thought about trying my best to do that in Python, but as a ronin I don't have that much time, so this time I follow existing work and tackle the simpler kind of `AA`, the shading type. My experience leans toward the Web in the first place, and when it comes to `Python` I don't understand even the first 3px of the first stroke of the `P`, so even the shading type was hard going...

[Paper on line art style AA (!?)](https://www.jstage.jst.go.jp/article/iieej/35/5/35_5_435/_pdf)

This time I will draw shading-type AA.

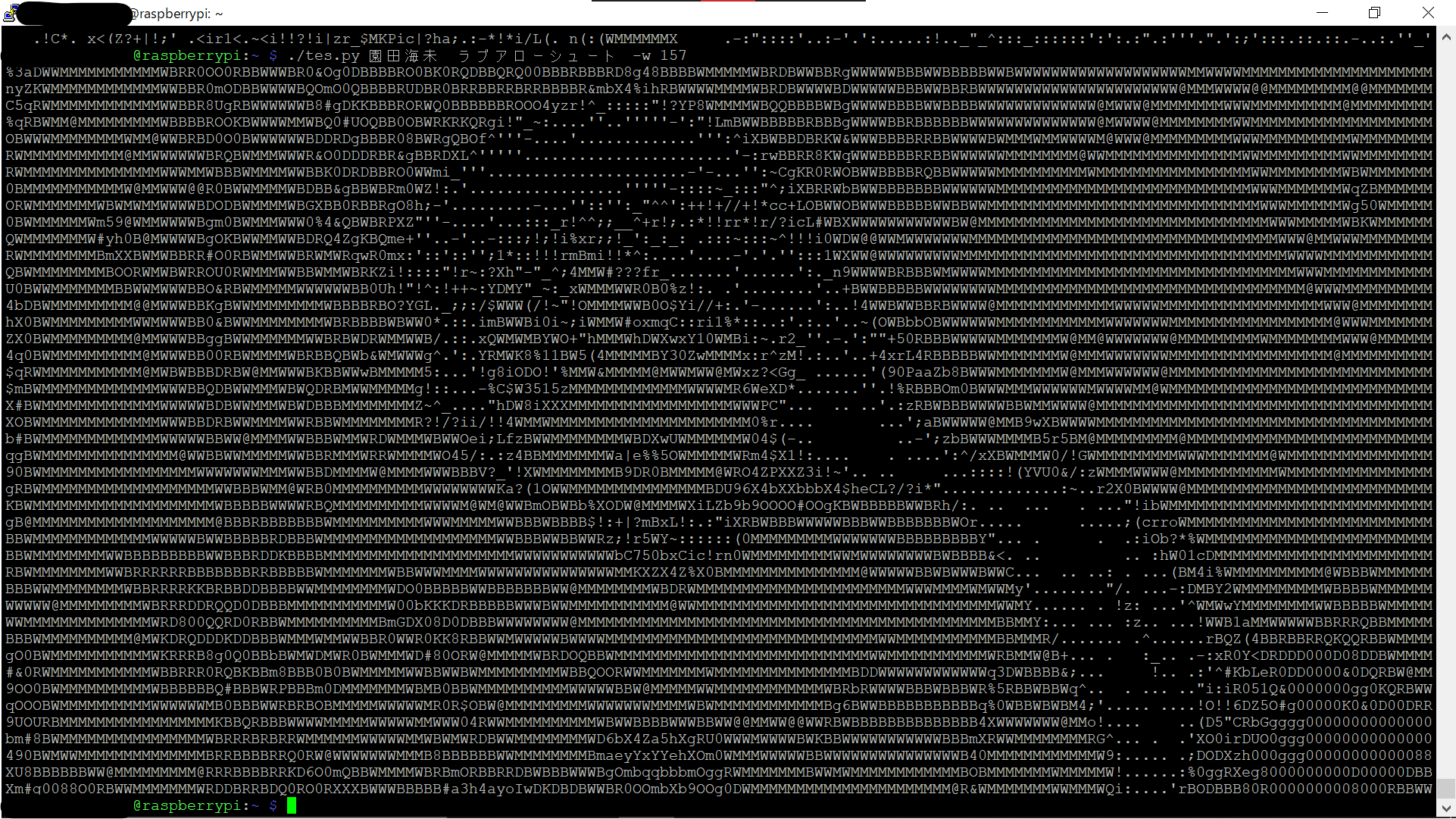

Simply by placing, for each pixel, the character closest to it in brightness, you end up with something like this.

The width is 80 characters, which just fits a small terminal window, but you can adjust it freely to make the output larger.

If you make it larger, the resolution naturally goes up and the result looks cleaner.

How is it?

If you look at this when you're tired and your hands have stopped, can't you hear that handsome voice?

What the f●ck are you doing? Get back to work or I will beat the shit out of you!

**(I can't vouch for compliance, since this voice is played back inside my head)**

A hundred times the energy, and little corporate-drone-chan is back to crunching through work.

That's the kind of project this is.

Anyway, he looks cool even as AA.

Environment

$ uname -a

Linux raspberrypi 5.4.51-v7l+ #1333 SMP Mon Aug 10 16:51:40 BST 2020 armv7l GNU/Linux

$ python -V

Python 3.7.3

$ pip list

Package Version

------------------- ---------

beautifulsoup4 4.9.2

numpy 1.19.2

Pillow 7.2.0

pip 20.2.3

requests 2.24.0

Features

As mentioned above, there is plenty of prior work if all you do is generate shading AA automatically, so I added a few twists of my own. If I may say so myself, I think it has become easier to use.

- AA is returned whether you pass a `keyword`, a `URL`, or a `local file path`.

- In connection with the above, I built in **Google Image Search** to fetch images automatically.

- The **character assignment algorithm** (?) has been improved for better fidelity.

Where to use?

On a CUI, when I want a quick look at an image, having to transfer it over `FTP` every single time is a hassle. With this program you can display the image as AA and may be able to get the gist of it, which is convenient. (A stretch, I know.) Personally, I don't accept Linux with anything other than a CUI, so I'm glad that a single command makes AA bloom on Linux's black-and-white screen. For example, give it `Umi Sonoda` and Umi will smile at you. Happy? I am.

Character assignment algorithm gymnastics

Characters are assigned according to pixel brightness. For better compatibility, only plain ASCII characters are used this time.

- For each character, calculate the fraction of a fixed-size area that its glyph covers

- Normalize those fractions to 256 levels, from 0 to 255, and use the result as the map

- Compare each pixel of the image to be converted against the map and pick the appropriate character
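The three steps can be sketched on toy data as follows. This is only an illustration under stated assumptions: the coverage values in `coverage` are made up rather than measured from a font, and the matching uses a brute-force nearest-value search for readability, not the `numpy.searchsorted` approach used in the script itself.

```python
import numpy as np

# Step 1 (assumed): coverage fraction of each character's glyph.
# These numbers are invented for illustration, not measured from a font.
coverage = {' ': 0.00, '.': 0.05, ':': 0.10, '+': 0.20, '#': 0.40}

chars = np.array(list(coverage.keys()))
raw = np.array(list(coverage.values()))

# Step 2: normalize the coverage values to the 0-255 range.
levels = (raw - raw.min()) / (raw.max() - raw.min()) * 255

# Step 3: for every pixel, pick the character whose level is nearest.
pixels = np.array([[0, 40, 120],
                   [200, 255, 64]])
nearest = np.abs(pixels[..., None] - levels).argmin(axis=-1)
rows = [''.join(r) for r in chars[nearest]]

for row in rows:
    print(row)
```

Dark pixels map to sparse characters and bright pixels to dense ones, which is what makes the result readable on a black terminal background.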

jason2aa.py

from PIL import Image, ImageDraw, ImageFont  # Load Pillow
import numpy as np  # Load NumPy

width = 80  # Width (number of characters)
font = ImageFont.truetype('DejaVuSansMono.ttf', 16)  # Font used to build the map (Raspberry Pi default)
characters = list('!"#$%&\'(*+,-./0123456789:;<=?@ABCDEFGHIJKLMNOPQRSTUVWXYZ[^_`abcdefghijklmnopqrstuvwxyz{|~ ')  # Characters to use (ASCII)
img = Image.open('hogehoge.png')  # Image to convert
# Convert to grayscale and shrink to `width` columns (same resize as in the full listing;
# the // 2 compensates for terminal characters being taller than they are wide)
img_gray = img.convert('L').resize((width, int(img.height * width / img.width // 2)))

# Normalization function (rescales the list of numbers passed in to 0-255 and returns it)
def normalize(l):
    l_min = min(l)  # Minimum value in the list
    l_max = max(l)  # Maximum value in the list
    return [(i - l_min) / (l_max - l_min) * 255 for i in l]  # Normalize each item

# Density calculation function (returns the characters as a list of
# (256-level density, character) pairs sorted by density)
def calc_density():
    l = []  # Empty list to collect the densities
    for i in characters:  # Repeat for every character
        im = Image.new('L', (24, 24), 'black')  # Create a 24px x 24px solid black grayscale image
        draw = ImageDraw.Draw(im)  # Wrap the image in an ImageDraw object
        draw.text((0, 0), i, fill='white', font=font)  # Draw the character in white on the image
        l.append(np.array(im).mean())  # Append the mean brightness of the image with the character drawn
    normed = normalize(l)  # Normalize the per-character brightness to 256 levels
    density = {key: val for key, val in zip(normed, characters)}  # Build a {brightness: character} dictionary
    return sorted(density.items(), key=lambda x: x[0])  # Sort the dictionary items by brightness and return them

maps = calc_density()  # Get the sorted (brightness, character) pairs
density_map = np.array([i[0] for i in maps])  # Extract only the brightness values into a NumPy array
character_map = np.array([i[1] for i in maps])  # Extract only the characters into a NumPy array
imarray = np.array(img_gray)  # Pixel brightness of the image as a NumPy array
index = np.searchsorted(density_map, imarray)  # For each pixel, find the index of a character with similar brightness
aa = character_map[index]  # Fancy-index the character array with the index array above

This is almost a wholesale copy of this great article (sorry), but I improved the behavior of the character assignment.

It runs a little slower, but using the `numpy.searchsorted` function lets the shading be expressed more accurately.

As a result, contours are captured more easily when converting images.

If you pass the reference array as the first argument and the targets as the second, `numpy.searchsorted` returns, for each target, the index of an element in the reference array close to it (strictly speaking, the position at which the target would be inserted to keep the array sorted). [^ 1]
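To make the footnoted difference concrete, here is a small sketch with a made-up three-level `density_map`. It shows what `numpy.searchsorted` returns by default, next to a hypothetical helper, `nearest_index`, that returns the truly nearest element instead. The helper is an illustration only and is not part of the original script.

```python
import numpy as np

# Made-up, already sorted brightness levels (illustration only).
density_map = np.array([0.0, 100.0, 255.0])
pixels = np.array([0, 1, 99, 100, 101, 255])

# Default behavior (side='left'): the first index i with density_map[i] >= value.
# A pixel of brightness 1 therefore already maps to index 1, not to index 0.
idx = np.searchsorted(density_map, pixels)
print(idx)  # [0 1 1 1 2 2]

def nearest_index(sorted_values, targets):
    """Hypothetical helper: index of the nearest element (needs at least 2 values)."""
    right = np.clip(np.searchsorted(sorted_values, targets), 1, len(sorted_values) - 1)
    left = right - 1
    pick_left = (targets - sorted_values[left]) <= (sorted_values[right] - targets)
    return np.where(pick_left, left, right)

print(nearest_index(density_map, pixels))  # [0 0 1 1 1 2]
```

With a map of dozens of closely spaced levels the two results differ by at most one step, so for shading AA the plain `searchsorted` result is usually close enough.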

Because the reference array has to be sorted and what comes back is an index, the surrounding processing, such as building the map, is pushed through a dictionary by brute force.

The two lists are combined into a dictionary, sorted by key (brightness), and then split back into two arrays, one of brightness values and one of characters.

If you use this as an application, it would be better to drop this function and hard-code the map to make processing lighter.
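One way the "fixed map" idea could look is sketched below. The four-entry map is invented for illustration; in practice you would paste in the values that `calc_density()` produced once. Because a grayscale pixel can only take 256 values, the lookup can also be precomputed into a 256-entry table (`lut`, a hypothetical name), after which conversion is a single indexing operation.

```python
import numpy as np

# Hypothetical hard-coded map (made-up values), sorted by brightness.
density_map = np.array([0.0, 60.0, 130.0, 255.0])
character_map = np.array([' ', '.', 'o', 'M'])

# Precompute once: the character to print for every possible 8-bit pixel value.
lut = character_map[np.searchsorted(density_map, np.arange(256))]

# Converting an image is then plain fancy indexing.
imarray = np.array([[0, 50, 61],
                    [130, 131, 255]], dtype=np.uint8)
aa = lut[imarray]
lines = [''.join(row) for row in aa]
print('\n'.join(lines))
```

This removes both the font rendering and the per-image `searchsorted` call, at the cost of the map no longer adapting if the font or the character set changes.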

If you use numpy to put the brightness of each pixel of the image into an array and pass it to `numpy.searchsorted`, you get **an array holding the indexes of the characters with similar brightness**.

Write this out by whatever method you like, and the AA is complete.

Image search on Google → Get

I throw the keywords at Google-sensei and pull an image by scraping.

Since it ends up as AA anyway, thumbnail quality is plenty, which makes this easy to implement.

Incidentally, you pass `q=keyword1+keyword2` to Google-sensei as the search keywords and `tbm=isch` to request an image search.
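As a small sketch of how such a query string can be assembled, the standard library's `urllib.parse.urlencode` joins and escapes the parameters. The function name `build_search_url` is hypothetical, and the extra parameters (`safe`, `num`, `pws`) are simply the ones that appear in the URL used later in this article.

```python
from urllib.parse import urlencode

def build_search_url(keywords):
    """Build a Google image-search URL from a list of keywords (illustrative helper)."""
    query = {
        'q': ' '.join(keywords),  # urlencode turns the spaces into '+'
        'tbm': 'isch',            # request an image search
        'safe': 'off',
        'num': 100,
        'pws': 0,
    }
    return 'https://www.google.com/search?' + urlencode(query)

url = build_search_url(['Jason', 'Statham'])
print(url)
```

Compared with joining the keywords with `'+'` by hand, this also escapes characters such as `&` or non-ASCII text in the keywords.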

- Get the HTML of the entire Google results page with `Requests`

- Pick out the `<img>` tags (images)

- Get the `src` attribute values (image URLs)

- Download the image from the extracted image link

- Save the downloaded image to a temporary file

jason2aa.py

from bs4 import BeautifulSoup  # Library for working with HTML nodes
import requests  # "HTTP for Humans" library for HTTP communication
import tempfile  # Library for managing temporary files
import os  # Operating-system interfaces (used to open the temporary file descriptor)
import random  # Random number library (used to pick an <img> tag)
import re  # Regular expression library
import sys  # Access to the interpreter itself (used for sys.exit)

# Image search / fetch function (downloads one image from the page at the URL passed in
# and returns the path of the temporary file it was saved to)
def get_image(destination):
    try:  # This does not stay on the local machine, so errors are expected and handled
        html = requests.get(destination).text  # Send an HTTP request and take the response as text
        soup = BeautifulSoup(html, 'lxml')  # Use the lxml parser (fall back to 'html.parser' if lxml is not installed)
        links = soup.find_all('img')  # Find and extract every <img> tag
        for i in range(10):  # Retry up to 10 times, since a pick may not yield an image
            link = links[random.randrange(len(links))].get('src')  # Pick one <img> tag at random and take its "src"
            if link and re.match(r'https?://[\w/:%#\$&\?\(\)~\.=\+\-]+', link):  # Check that a src exists and is a URL
                image = requests.get(link)  # Download the image at the extracted link
                image.raise_for_status()  # Detect request errors (such as 404)
                break  # Got the image, so leave the loop
            elif i >= 9:  # Tried 10 times, so give up
                raise Exception('Oh dear. Couldn\'t find any image.\nWhy don\'t you try with my name?')  # Hand a custom error to the except clause below
    except Exception as e:  # Catch the error
        print('Error: ', e)  # Print the error message
        sys.exit(1)  # Exit with status 1 (abnormal)
    fd, temp_path = tempfile.mkstemp()  # Create a temporary file
    with os.fdopen(fd, 'wb') as f:  # Open the temporary file through its descriptor so it is not leaked
        f.write(image.content)  # Write the image to the temporary file
    return temp_path  # Return the path of the temporary file

url = 'https://www.google.com/search?q=Jason+Statham&tbm=isch&safe=off&num=100&pws=0'  # Search Google-sensei for images of Jason Statham
file_path = get_image(url)  # Store the path of the fetched image in a variable

Scraping goes very smoothly. When I implemented the same thing in PHP it took some effort, so I found this convenient.

Note that the requests library works at the HTTP level, so even if the response to `requests.get()` is 404 Not Found, no exception is raised.

From a scraping point of view that is still a failure, though, so you have to handle those cases yourself.

Fortunately, error status codes such as 404 can all be treated as abnormal, so if you call the convenient `raise_for_status()` method on the response, an exception is raised whenever the status code is a 4xx or 5xx error.

You can catch that exception with `except` and branch on the status code.
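A minimal sketch of that branching is shown below. The function name `describe_failure` and the labels it returns are hypothetical; the calls it relies on, `Response.raise_for_status()` and `requests.exceptions.HTTPError` with its `response` attribute, are part of requests. To keep the example runnable offline, the demo builds bare `requests.Response` objects by hand instead of contacting a server.

```python
import requests

def describe_failure(response):
    """Return None on success, or a short label for the kind of HTTP failure."""
    try:
        response.raise_for_status()  # Raises HTTPError for 4xx and 5xx status codes
    except requests.exceptions.HTTPError as e:
        status = e.response.status_code
        if status == 404:
            return 'not found'
        if 400 <= status < 500:
            return 'client error'
        return 'server error'
    return None

# Offline demo: hand-made responses stand in for real requests.get() results.
for code in (200, 404, 403, 503):
    fake = requests.Response()
    fake.status_code = code
    print(code, describe_failure(fake))
```

In the script itself a single `except Exception` is enough, because every failure ends the same way; finer branching like this only pays off if different codes deserve different reactions, such as retrying on a 5xx.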

As it is

I played around with it so that it could be used like an app.

Type `jason2aa.py -h` to display the help.

Okey, here is the fuckin' help.

Usage: jason2aa.py keyword keyword .. [option]

[Options]

-w Width of AA (The number of characters)

-p Path to image file

-b Return Black and white reversed image

-h Show this help (or -help)

If I was you, I'm sure I can handle such a bullshit app without the help.

Because, I'm a true man.
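For comparison, the same options could also be declared with the standard library's `argparse`. This is only a sketch of an alternative, not how the script works (it parses `sys.argv` by hand); the function name `build_parser` and the destination names `keywords`, `width`, `path`, and `invert` are made up for the example.

```python
import argparse

def build_parser():
    """Declare the jason2aa.py options with argparse (illustrative alternative)."""
    parser = argparse.ArgumentParser(prog='jason2aa.py')
    # Search keywords; fall back to Jason Statham when none are given.
    parser.add_argument('keywords', nargs='*', default=['Jason', 'Statham'])
    parser.add_argument('-w', dest='width', type=int, default=None,
                        help='Width of AA (the number of characters)')
    parser.add_argument('-p', dest='path', default=None,
                        help='Path to image file')
    parser.add_argument('-b', dest='invert', action='store_true',
                        help='Return black and white reversed image')
    return parser

args = build_parser().parse_args(['Umi', 'Sonoda', '-w', '120', '-b'])
print(args.keywords, args.width, args.path, args.invert)
```

The trade-off is tone: `argparse` generates its own `-h` text and its own error messages, so the in-character help and insults would have to be wired in separately.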

Since the title promises "Jason Statham style", I had the Jason Statham in my head help with the messages so that it wouldn't be false advertising.

Do try triggering the various errors.

For example, if you pass a width that cannot be converted with `int()`,

it answers like this lol

If no search word or path is passed, Jason Statham's image is of course returned.

By default, the width is the full width of the terminal window.

This is implemented with `os.get_terminal_size().columns`, with reference to [this article](https://www.lifewithpython.com/2017/10/python-terminal-size.html). I wanted to keep the number of imported libraries down, so I did it with `os`.
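One caveat worth sketching: `os.get_terminal_size()` raises `OSError` when standard output is not attached to a terminal, for example when the output is piped to a file. A hypothetical wrapper, `terminal_width`, could fall back to the 80 columns mentioned earlier in that case. The wrapper is an illustration and is not in the script.

```python
import os

def terminal_width(default=80):
    """Terminal width in columns, or `default` when no terminal is attached."""
    try:
        columns = os.get_terminal_size().columns
    except OSError:
        # Raised when stdout is not a terminal (e.g. redirected to a file or pipe).
        return default
    # Some environments report 0 columns; treat that as unknown as well.
    return columns if columns > 0 else default

width = terminal_width()
print(width)
```

This stays within the `os` module, in keeping with the wish to avoid extra imports.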

The path you pass is handled so as to avoid errors as far as possible, but please let me know if one occurs.

I also added black-and-white inversion.

The program is meant to be used in a terminal, but on the Web and the like the background is usually white, so the `-b` option inverts the output.

Image processing

To get a good result with shading AA, you need to raise the **contrast**.

If the contrast is low, **black** (space) and **white** (M) go unused and the outline is not clear.

Since AA has few density levels, it is hard to tell what the image is unless the outline is clear.

This time, for reasons of module choice, I simply raised the contrast and then converted to grayscale, but a method that makes the shading distinct during the grayscale conversion itself also seems good.

cont = ImageEnhance.Contrast(img)  # Create a Pillow enhancer object
img_gray = cont.enhance(2.5).convert('L')  # Raise the contrast 2.5x, then convert to grayscale
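The effect of that enhancer can be checked on a synthetic image, as in the sketch below. The two-tone test image is made up for the purpose; `ImageEnhance.Contrast`, `enhance`, `convert`, and `getextrema` are regular Pillow calls.

```python
from PIL import Image, ImageEnhance

# Synthetic low-contrast RGB image: left half dark gray, right half light gray.
img = Image.new('RGB', (4, 2), (100, 100, 100))
img.paste((150, 150, 150), (2, 0, 4, 2))

plain = img.convert('L')
boosted = ImageEnhance.Contrast(img).enhance(2.5).convert('L')

# getextrema() returns the (darkest, brightest) value in a grayscale image.
spread_plain = plain.getextrema()
spread_boosted = boosted.getextrema()
print(spread_plain, spread_boosted)
```

The boosted image spans a much wider brightness range, so more of the character map, from space up to the densest glyphs, actually gets used.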

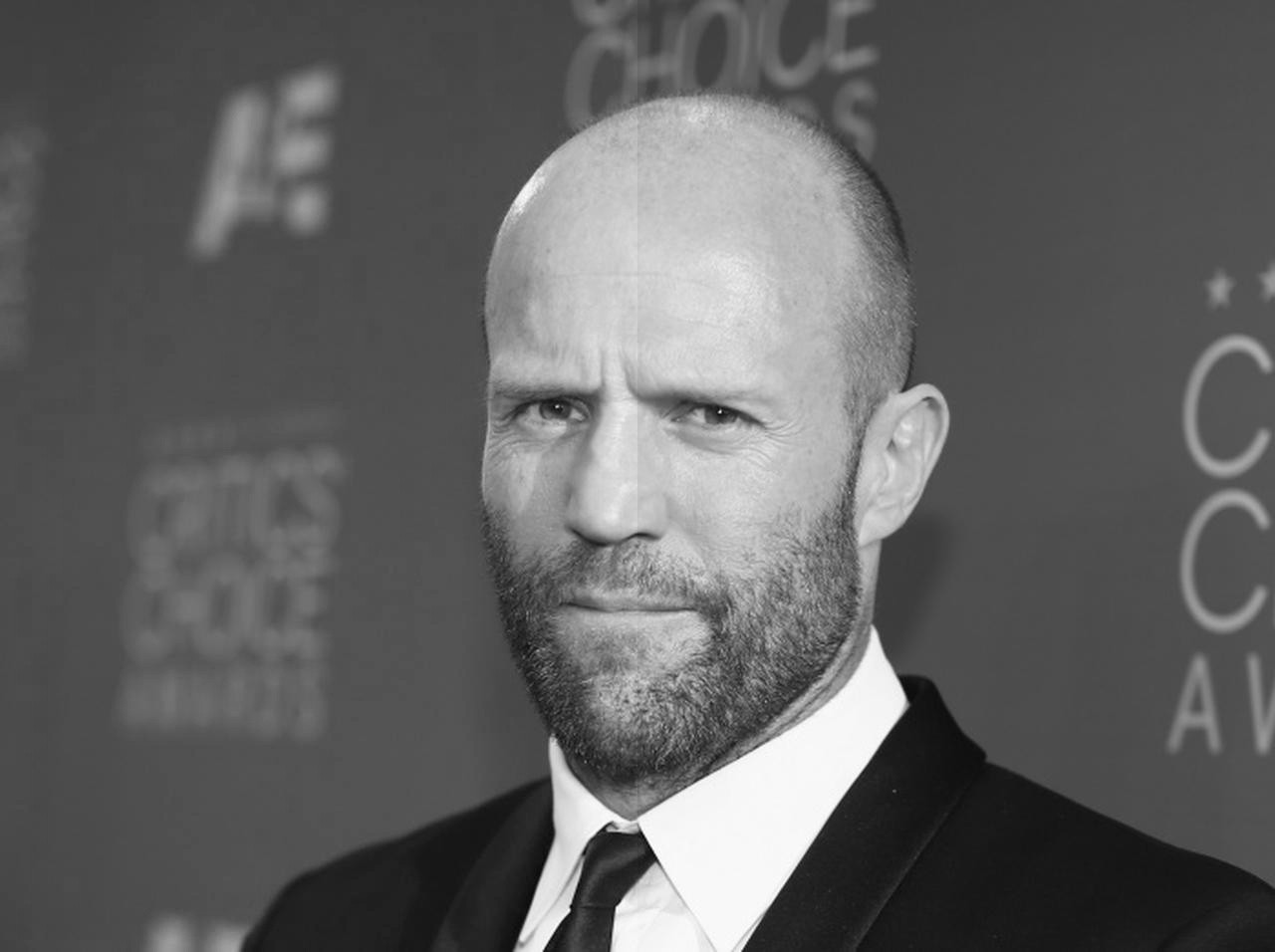

Deep grayscale

[This article](https://qiita.com/yoya/items/dba7c40b31f832e9bc2a) covers it in detail, but it seems that even for something called grayscale there are various conversion methods.

The one on the left was grayscaled with `Pillow`, and the one on the right with the **decolorization process** of `OpenCV`. The difference is hard to see in this image, but if you look around the suit and the dress shirt, you can see that **black and white are emphasized** on the right. The method on the right seems to focus on preserving contrast.

Simply put, grayscale is an attempt to express human color perception in shades of black and white, but for machine recognition it seems better to **preserve contrast** than to follow human perception. It is interesting that, the deeper you dig, the more this area turns out to be tied to image recognition and the like.

For me, the image recognition that comes to mind right now is `EyeSight`, and that is surely a very complicated process. But having touched just the edge of image processing like this, I suspect that even such complicated processing, traced far enough, comes down to simple parameter tuning. Precisely because it is simple, it is a wonderful technology, peripheral programs included, that can only be called the fruit of decades of accumulated experience.
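As one concrete instance of "various conversion methods": Pillow documents its RGB to `L` conversion as the ITU-R 601-2 luma transform, L = R * 299/1000 + G * 587/1000 + B * 114/1000, which weights the channels by how bright they appear to the human eye. The sketch below (the helper name `pillow_gray` is made up) shows the consequence for three pure colors.

```python
from PIL import Image

def pillow_gray(rgb):
    """Brightness Pillow assigns to one RGB color when converting to mode 'L'."""
    return Image.new('RGB', (1, 1), rgb).convert('L').getpixel((0, 0))

red = pillow_gray((255, 0, 0))
green = pillow_gray((0, 255, 0))
blue = pillow_gray((0, 0, 255))
print(red, green, blue)
```

Pure green comes out far brighter than pure blue even though both are "full intensity", which is the perceptual weighting at work. A contrast-preserving method would instead choose gray levels that keep neighboring colors distinguishable.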

Full text

If you have the libraries installed, it runs as a straight copy-paste. I don't know whether there is any demand, but I have also put it on GitHub. If you are someone who thinks "I want to make my terminal bloom!", please give it a try.

jason2aa.py

#!/usr/bin/python3.7
from PIL import Image, ImageDraw, ImageFont, ImageEnhance
from bs4 import BeautifulSoup
import numpy as np
import requests
import tempfile
import os
import re
import sys
import random

is_direct = False  # True when the image was downloaded from a URL given with -p
is_local = False  # True when the image is a local file given with -p
background = 'black'
color = 'white'
width = os.get_terminal_size().columns
font = ImageFont.truetype('DejaVuSansMono.ttf', 16)
characters = list('!"#$%&\'(*+,-./0123456789:;<=?@ABCDEFGHIJKLMNOPQRSTUVWXYZ[^_`abcdefghijklmnopqrstuvwxyz{|~ ')

def get_image(destination):
    try:
        html = requests.get(destination)
        html.raise_for_status()
        soup = BeautifulSoup(html.text, 'lxml')
        links = soup.find_all('img')
        for i in range(10):
            link = links[random.randrange(len(links))].get('src')
            # Skip <img> tags without a src, then check that the src is a URL
            if link and re.match(r'https?://[\w/:%#\$&\?\(\)~\.=\+\-]+', link):
                image = requests.get(link)
                image.raise_for_status()
                break
            elif i >= 9:
                raise Exception('Oh dear. Couldn\'t find any image.\nWhy don\'t you try with my name?')
    except Exception as e:
        print('Error: ', e)
        sys.exit(1)
    fd, temp_path = tempfile.mkstemp()
    with os.fdopen(fd, 'wb') as f:  # Reuse the descriptor from mkstemp so it is not leaked
        f.write(image.content)
    return temp_path

def normalize(l):
    l_min = min(l)
    l_max = max(l)
    return [(i - l_min) / (l_max - l_min) * 255 for i in l]

def calc_density():
    l = []
    for i in characters:
        im = Image.new('L', (24, 24), background)
        draw = ImageDraw.Draw(im)
        draw.text((0, 0), i, fill=color, font=font)
        l.append(np.array(im).mean())
    normed = normalize(l)
    density = {key: val for key, val in zip(normed, characters)}
    return sorted(density.items(), key=lambda x: x[0])

arg = sys.argv

if '-h' in arg or '-help' in arg:
    print(' Okey, here is the fuckin\' help.\n'
          '\n'
          ' Usage: jason2aa.py keyword keyword .. [option]\n'
          '\n'
          ' [Options]\n'
          ' -w Width of AA (The number of characters, less than 10000)\n'
          ' -p Path to image file\n'
          ' -b Return Black and white reversed image\n'
          ' -h Show this help (or -help)\n'
          '\n'
          ' If I was you, I\'m sure I can handle such a bullshit app without help.\n'
          ' Because, I\'m a true man.')
    sys.exit(0)

if '-b' in arg:
    # Swap background and text color so the density map is built inverted
    arg.pop(arg.index('-b'))
    background = 'white'
    color = 'black'

if '-w' in arg:
    w_index = arg.index('-w')
    try:
        w_temp = int(arg.pop(w_index + 1))
        if 0 < w_temp < 10000:
            width = w_temp
        else:
            raise Exception
        arg.pop(w_index)
    except Exception:
        print('Watch it! You passed me an invalid argument as width.\nI replaced it with default width.')
        arg.pop(w_index)

if '-p' in arg:
    p_index = arg.index('-p')
    try:
        path = arg.pop(p_index + 1)
        arg.pop(p_index)
        if re.match(r'https?://[\w/:%#\$&\?\(\)~\.=\+\-]+', path):
            # The path is a URL: download it to a temporary file
            image = requests.get(path)
            image.raise_for_status()
            fd, temp_path = tempfile.mkstemp()
            with os.fdopen(fd, 'wb') as f:
                f.write(image.content)
            is_direct = True
            file_path = temp_path
        else:
            if os.path.exists(path):
                is_local = True
                file_path = path
            else:
                raise Exception('There ain\'t any such file. Are you fucking with me?')
    except Exception as e:
        # Any failure above falls back to a Jason Statham image search
        print(e, '\nThe above error blocked me accessing the path.\nBut, don\'t worry. Here is my photo.')
        url = 'https://www.google.com/search?q=Jason+Statham&tbm=isch&safe=off&num=100&pws=0'
        file_path = get_image(url)
else:
    if len(arg) <= 1:
        arg = ['Jason', 'Statham']
    else:
        arg.pop(0)
    url = 'https://www.google.com/search?q=' + '+'.join(arg) + '&tbm=isch&safe=off&num=100&pws=0'
    file_path = get_image(url)

for i in range(10):
    try:
        img = Image.open(file_path)
    except Exception:
        print('Oh, fuck you! I couldn\'t open the file.\n'
              'It\'s clearly your fault, cuz the path wasn\'t to image file.\n'
              'But, possibly, it\'s caused by error like 404. Sorry.')
        sys.exit(1)
    if img.mode == 'RGB':
        cont = ImageEnhance.Contrast(img)
        img_gray = cont.enhance(2.5).convert('L').resize((width, int(img.height * width / img.width // 2)))
        break
    elif is_local or is_direct:
        print('Shit! I could only find only useless image.\nYou pass me files containing fuckin\' alpha channel, aren\'t you?.')
        sys.exit(1)
    elif i >= 9:
        print('Damn it! I could only find only useless image.\nMaybe, fuckin\' alpha channel is contained.')
        sys.exit(1)
    else:
        # Searched image was not plain RGB: discard it and fetch another one
        os.remove(file_path)
        file_path = get_image(url)

maps = calc_density()
density_map = np.array([i[0] for i in maps])
character_map = np.array([i[1] for i in maps])
imarray = np.array(img_gray)
index = np.searchsorted(density_map, imarray)
aa = character_map[index]
aa = aa.tolist()

for row in aa:  # Print the AA one row of characters at a time
    print(''.join(row))

if not is_local:  # Clean up the temporary file, but never delete the user's own file
    os.remove(file_path)

sys.exit(0)

Summary

I'm inexperienced, and this was my first time playing with Python.

It is a very approachable, easy-to-use language, and I enjoyed writing in it.

A Web search turns up any number of ways to generate AA automatically, but building one myself let me see it from a different angle, such as working out the character assignment, and that was interesting.

Also, when I have time, I would like to try **line art style AA** by making full use of CV2 and contour detection.

If you have any advice or pointers, I would appreciate hearing them. Thank you for reading to the end!

Just in case (though I doubt they will see this (laughs)), thank you to the programmer who gave me the chance to do something a little playful. I think that articles on approachable topics (?) like Jason Statham can give people who have nothing to do with programming a glimpse of what goes on behind software. In an age when we cannot be separated from electronic devices and many technologies are intricately intertwined, I think it is very meaningful to get a glimpse of the technologies that support us, even if we are not in a directly related position. Having dabbled in programming a little myself, I often feel that it has a great influence on how I think about everyday things. (Qiita is a platform for programmers, so its purpose is quite different.) I hope that even a low-level script like this can be of help to someone. Thank you very much.

[^ 1]: The exact behavior differs, so please refer to the official documentation for details.
