[PYTHON] [Translation] scikit-learn 0.18 Tutorial: An introduction to machine learning with scikit-learn

This is a Google translation of http://scikit-learn.org/stable/tutorial/basic/tutorial.html. See also the scikit-learn 0.18 Tutorial Table of Contents.

Section content

This section introduces machine learning terms used in scikit-learn and provides simple learning examples.

Machine learning: problem setting

In general, a learning problem considers a set of n samples of data and then tries to predict properties of unknown data. If each sample is more than a single number, for instance a multi-dimensional entry (also known as multivariate data), it is said to have several attributes or features.

Learning problems can be divided into a few broad categories:

- In Supervised Learning, the data comes with the additional attributes that we want to predict (click here: nazoking@github/items/267f2371757516f8c168#1-%E6%95%99%E5%B8%AB%E4%BB%98%E3%81%8D%E5%AD%A6%E7%BF%92 to go to the scikit-learn supervised learning page). This problem can be one of the following:
  - Classification (https://en.wikipedia.org/wiki/Classification_in_machine_learning): samples belong to two or more classes, and we want to learn from already labeled data how to predict the class of unlabeled data. An example of a classification problem is handwritten digit recognition, where the aim is to assign each input vector to one of a finite number of discrete categories. Another way to think of classification is as a discrete (as opposed to continuous) form of supervised learning, where there is a limited number of categories and each of the n samples provided is to be labeled with the correct category or class.
  - Regression (https://en.wikipedia.org/wiki/Regression_analysis): if the desired output consists of one or more continuous variables, the task is called regression. An example of a regression problem is predicting the length of a salmon as a function of its age and weight.
- In Unsupervised Learning, the training data consists of a set of input vectors x without any corresponding target values. The goal in such problems may be to discover groups of similar examples within the data, which is called Clustering (https://en.wikipedia.org/wiki/Cluster_analysis); to determine the distribution of data within the input space, which is called Density Estimation (https://en.wikipedia.org/wiki/Density_estimation); or to project the data from a high-dimensional space down to two or three dimensions for visualization (click here: http://qiita.com/nazoking@github/items/267f2371757516f8c168#2-%E6%95%99%E5%B8%AB%E3%81%AA%E3%81%97%E5%AD%A6%E7%BF%92 to go to the scikit-learn unsupervised learning page).

**Training set and test set**

Machine learning is about learning some properties of a dataset and then applying them to new data. This is why a common way to evaluate an algorithm is to split the data at hand into two sets: the **training set**, on which the properties of the data are learned, and the **test set**, on which those properties are tested.
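As a rough illustration of such a split, the sketch below uses train_test_split from sklearn.model_selection on the iris dataset. The 25% test fraction and the random_state value are arbitrary choices made for this example; they are not taken from the tutorial.

```python
from sklearn import datasets
from sklearn.model_selection import train_test_split

iris = datasets.load_iris()

# Hold out a quarter of the samples for testing (fraction chosen for illustration).
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.25, random_state=0)

# The two sets together contain every original sample exactly once.
print(X_train.shape, X_test.shape)
```

The estimator would then be fitted on X_train and y_train only, and evaluated on X_test and y_test.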

Load sample dataset

scikit-learn comes with a few standard datasets, for example the iris and digits datasets for classification and the Boston house prices dataset for regression.

The following starts a Python interpreter from the shell and then loads the iris and digits datasets. In our notation, $ denotes the shell prompt and >>> denotes the Python interpreter prompt.

$ python

>>> from sklearn import datasets

>>> iris = datasets.load_iris()

>>> digits = datasets.load_digits()

A dataset is a dictionary-like object that holds all the data and some metadata about that data. The data is stored in the .data member, which is an array of shape (n_samples, n_features). In the case of a supervised problem, one or more response variables are stored in the .target member. For more information on the different datasets, see the dedicated section (http://scikit-learn.org/0.18/datasets/index.html#datasets).
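A quick way to see these members is to print their shapes. The sketch below does this for the iris dataset; the choice of iris (rather than digits) is only for brevity.

```python
from sklearn import datasets

iris = datasets.load_iris()

# .data is a 2D array: one row per sample, one column per feature.
print(iris.data.shape)

# .target holds one response value (here a class index) per sample.
print(iris.target.shape)

# Metadata: the names of the classes the target indices refer to.
print(list(iris.target_names))
```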

For example, in the case of the digits dataset, digits.data gives access to the features that can be used to classify the digit samples:

>>> print(digits.data)

[[ 0. 0. 5. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 10. 0. 0.]

[ 0. 0. 0. ..., 16. 9. 0.]

...,

[ 0. 0. 1. ..., 6. 0. 0.]

[ 0. 0. 2. ..., 12. 0. 0.]

[ 0. 0. 10. ..., 12. 1. 0.]]

digits.target gives the ground truth for the digits dataset, that is, the number corresponding to each digit image that we are trying to learn:

>>> digits.target

array([0, 1, 2, ..., 8, 9, 8])

**Data array shape**

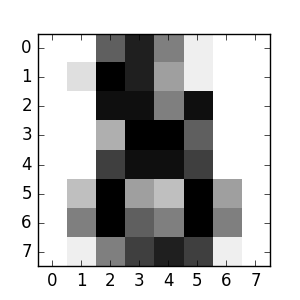

The data is always a two-dimensional array of shape (n_samples, n_features), even if the original data had a different shape. In the case of the digits, each original sample is an image of shape (8, 8) and can be accessed using:

>>> digits.images[0]

array([[ 0., 0., 5., 13., 9., 1., 0., 0.],

[ 0., 0., 13., 15., 10., 15., 5., 0.],

[ 0., 3., 15., 2., 0., 11., 8., 0.],

[ 0., 4., 12., 0., 0., 8., 8., 0.],

[ 0., 5., 8., 0., 0., 9., 8., 0.],

[ 0., 4., 11., 0., 1., 12., 7., 0.],

[ 0., 2., 14., 5., 10., 12., 0., 0.],

[ 0., 0., 6., 13., 10., 0., 0., 0.]])

This simple example illustrates how, starting from the original problem, the data can be shaped for consumption by scikit-learn.
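The relationship between the (8, 8) images and the rows of digits.data can be checked directly. The following is a small sketch that flattens each image with NumPy's reshape and compares the result with digits.data; the variable names are chosen for this example.

```python
import numpy as np
from sklearn import datasets

digits = datasets.load_digits()

# Flatten each 8x8 image into a vector of 64 features.
n_samples = len(digits.images)
flat = digits.images.reshape((n_samples, -1))

print(digits.images.shape, flat.shape)

# The flattened images contain the same values as digits.data.
print(np.array_equal(flat, digits.data))
```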

**Loading from an external dataset**

To load from an external dataset, see Loading an External Dataset (http://scikit-learn.org/0.18/datasets/index.html#external-datasets).

Learning and prediction

In the case of the digits dataset, the task is to predict, given an image, which digit it represents. We are given samples of each of the 10 possible classes (the digits zero through nine), on which we fit an estimator (https://en.wikipedia.org/wiki/Estimator) so that it can predict the classes to which unseen samples belong.

In scikit-learn, an estimator for classification is a Python object that implements the methods fit(X, y) and predict(T).
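To make the fit/predict contract concrete, here is a minimal sketch of a toy object with that interface. MajorityClassifier is a hypothetical class invented for this illustration, not part of scikit-learn; it simply predicts the most frequent training label.

```python
import numpy as np


class MajorityClassifier:
    """Hypothetical toy estimator: always predicts the most frequent label."""

    def fit(self, X, y):
        # "Learning" here is just remembering the most common label in y.
        labels, counts = np.unique(y, return_counts=True)
        self.majority_ = labels[np.argmax(counts)]
        return self  # returning self allows chaining, e.g. fit(...).predict(...)

    def predict(self, T):
        # One prediction per row of T, regardless of the feature values.
        return np.full(len(T), self.majority_)


clf = MajorityClassifier().fit([[0], [1], [2]], [1, 1, 0])
print(clf.predict([[5], [6]]))
```

Real estimators learn something far more useful in fit, but they are driven through the same two calls.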

An example estimator is the class sklearn.svm.SVC, which implements support vector classification. The estimator constructor takes model parameters as arguments, but for the time being we consider the estimator to be a black box:

>>> from sklearn import svm

>>> clf = svm.SVC(gamma=0.001, C=100.)

**Model parameter selection**

In this example, the value of gamma is set manually. Tools such as grid search and cross validation can be used to find good values for the parameters automatically.
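As a sketch of what such an automatic search might look like, the example below uses GridSearchCV from sklearn.model_selection. The candidate values for gamma and C and the number of folds are assumptions picked for illustration, not recommendations from the tutorial.

```python
from sklearn import datasets, svm
from sklearn.model_selection import GridSearchCV

digits = datasets.load_digits()

# Candidate values are illustrative assumptions, not tuned recommendations.
param_grid = {"gamma": [0.0001, 0.001, 0.01], "C": [1.0, 10.0, 100.0]}

# Try every combination, scoring each one with 3-fold cross validation.
search = GridSearchCV(svm.SVC(), param_grid, cv=3)
search.fit(digits.data[:-1], digits.target[:-1])

# The combination that obtained the best mean cross-validated score.
print(search.best_params_)
```

After fitting, search.best_estimator_ holds a classifier refitted with the selected parameters.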

We call our estimator instance clf, as it is a classifier. It must now be fitted, that is, it must learn from the training data. This is done by passing the training set to the fit method. As a training set, we use all the images in the dataset except the last one. We select this training set with the [:-1] Python syntax, which produces a new array containing everything but the last entry of digits.data.

>>> clf.fit(digits.data[:-1], digits.target[:-1])

SVC(C=100.0, cache_size=200, class_weight=None, coef0=0.0,

decision_function_shape=None, degree=3, gamma=0.001, kernel='rbf',

max_iter=-1, probability=False, random_state=None, shrinking=True,

tol=0.001, verbose=False)

You can now predict new values. In particular, we can ask the classifier which digit is shown in the last image of the digits dataset, which was not used to train the classifier:

>>> clf.predict(digits.data[-1:])

array([8])

The corresponding images are:

As you can see, this is a challenging task: the images are of poor resolution. Do you agree with the classifier? A complete example of this classification problem is available as an example that you can run and study: Handwritten digit recognition.
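One way to check the classifier, rather than judging the image by eye, is to compare its prediction with the known label of the held-out sample. The sketch below repeats the fit from above and prints both values side by side.

```python
from sklearn import datasets, svm

digits = datasets.load_digits()

# Same estimator and training set as in the text: everything but the last image.
clf = svm.SVC(gamma=0.001, C=100.)
clf.fit(digits.data[:-1], digits.target[:-1])

predicted = clf.predict(digits.data[-1:])
actual = digits.target[-1]

# Print the predicted digit next to the ground-truth digit.
print(predicted[0], actual)
```

A single sample says little about overall quality; evaluating on a larger held-out test set gives a more reliable picture.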

Model persistence

It is possible to save a scikit-learn model using Python's built-in persistence module, pickle:

>>> from sklearn import svm

>>> from sklearn import datasets

>>> clf = svm.SVC()

>>> iris = datasets.load_iris()

>>> X, y = iris.data, iris.target

>>> clf.fit(X, y)

SVC(C=1.0, cache_size=200, class_weight=None, coef0=0.0,

decision_function_shape=None, degree=3, gamma='auto', kernel='rbf',

max_iter=-1, probability=False, random_state=None, shrinking=True,

tol=0.001, verbose=False)

>>> import pickle

>>> s = pickle.dumps(clf)

>>> clf2 = pickle.loads(s)

>>> clf2.predict(X[0:1])

array([0])

>>> y[0]

0

In the specific case of scikit-learn, it may be preferable to use joblib's replacement for pickle (joblib.dump and joblib.load). It is more efficient on large data, but it can only pickle to disk and not to a string:

>>> from sklearn.externals import joblib

>>> joblib.dump(clf, 'filename.pkl')

You can later load the pickled model (perhaps in another Python process):

>>> clf = joblib.load('filename.pkl')

Note: The joblib.dump and joblib.load functions also accept a file-like object instead of a filename. For more information on data persistence with joblib, see here (https://pythonhosted.org/joblib/persistence.html).

Keep in mind that pickle has some security and maintainability issues. For more information on model persistence with scikit-learn, see Model Persistence.
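The round trip through disk can be sketched as follows. Two assumptions apply: the example imports the standalone joblib package directly rather than going through sklearn.externals, and it writes to a temporary directory with a made-up file name so that nothing is left behind.

```python
import os
import tempfile

import joblib  # assumption: the standalone joblib package is installed
from sklearn import datasets, svm

iris = datasets.load_iris()
X, y = iris.data, iris.target
clf = svm.SVC().fit(X, y)

with tempfile.TemporaryDirectory() as tmpdir:
    # "model.pkl" is an arbitrary file name used for this example.
    path = os.path.join(tmpdir, "model.pkl")
    joblib.dump(clf, path)
    restored = joblib.load(path)

# The restored model should make the same predictions as the original.
same = bool((restored.predict(X) == clf.predict(X)).all())
print(same)
```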

Conventions

scikit-learn estimators follow certain rules to make their behavior more predictable.

Type casting

Input is cast to float64 unless otherwise specified:

>>> import numpy as np

>>> from sklearn import random_projection

>>> rng = np.random.RandomState(0)

>>> X = rng.rand(10, 2000)

>>> X = np.array(X, dtype='float32')

>>> X.dtype

dtype('float32')

>>> transformer = random_projection.GaussianRandomProjection()

>>> X_new = transformer.fit_transform(X)

>>> X_new.dtype

dtype('float64')

In this example, X is float32 and is cast to float64 by fit_transform(X).

Regression targets are cast to float64, while classification targets are maintained:

>>> from sklearn import datasets

>>> from sklearn.svm import SVC

>>> iris = datasets.load_iris()

>>> clf = SVC()

>>> clf.fit(iris.data, iris.target)

SVC(C=1.0, cache_size=200, class_weight=None, coef0=0.0,

decision_function_shape=None, degree=3, gamma='auto', kernel='rbf',

max_iter=-1, probability=False, random_state=None, shrinking=True,

tol=0.001, verbose=False)

>>> list(clf.predict(iris.data[:3]))

[0, 0, 0]

>>> clf.fit(iris.data, iris.target_names[iris.target])

SVC(C=1.0, cache_size=200, class_weight=None, coef0=0.0,

decision_function_shape=None, degree=3, gamma='auto', kernel='rbf',

max_iter=-1, probability=False, random_state=None, shrinking=True,

tol=0.001, verbose=False)

>>> list(clf.predict(iris.data[:3]))

['setosa', 'setosa', 'setosa']

Here, the first predict() returns an integer array, because iris.target (an integer array) was used in fit. The second predict() returns a string array, because iris.target_names was used for fitting.

Refitting and updating parameters

The hyperparameters of an estimator can be updated after it has been constructed, using the sklearn.pipeline.Pipeline.set_params method. Calling fit() more than once overwrites what was learned by any previous fit():

>>> from sklearn.svm import SVC

>>> rng = np.random.RandomState(0)

>>> X = rng.rand(100, 10)

>>> y = rng.binomial(1, 0.5, 100)

>>> X_test = rng.rand(5, 10)

>>> clf = SVC()

>>> clf.set_params(kernel='linear').fit(X, y)

SVC(C=1.0, cache_size=200, class_weight=None, coef0=0.0,

decision_function_shape=None, degree=3, gamma='auto', kernel='linear',

max_iter=-1, probability=False, random_state=None, shrinking=True,

tol=0.001, verbose=False)

>>> clf.predict(X_test)

array([1, 0, 1, 1, 0])

>>> clf.set_params(kernel='rbf').fit(X, y)

SVC(C=1.0, cache_size=200, class_weight=None, coef0=0.0,

decision_function_shape=None, degree=3, gamma='auto', kernel='rbf',

max_iter=-1, probability=False, random_state=None, shrinking=True,

tol=0.001, verbose=False)

>>> clf.predict(X_test)

array([0, 0, 0, 1, 0])

Here, the default kernel rbf is first changed to linear after the estimator has been constructed via SVC(), and then changed back to rbf to refit the estimator and make a second prediction.
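The effect of set_params can be inspected with the companion get_params method, which returns the current hyperparameters as a dictionary. A minimal sketch follows; the value C=10.0 is arbitrary.

```python
from sklearn.svm import SVC

clf = SVC()

# Hyperparameters as set by the constructor defaults.
print(clf.get_params()["kernel"])

# Several hyperparameters can be updated in one call; set_params returns the estimator.
clf.set_params(kernel="linear", C=10.0)
print(clf.get_params()["kernel"], clf.get_params()["C"])
```

Note that set_params only changes the configuration; the new values take effect on the next call to fit().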

Multi-class and multi-label fitting

When using multiclass classifiers (http://scikit-learn.org/0.18/modules/classes.html#module-sklearn.multiclass), the learning and prediction task that is performed depends on the format of the target data fit upon:

>>> from sklearn.svm import SVC

>>> from sklearn.multiclass import OneVsRestClassifier

>>> from sklearn.preprocessing import LabelBinarizer

>>> X = [[1, 2], [2, 4], [4, 5], [3, 2], [3, 1]]

>>> y = [0, 0, 1, 1, 2]

>>> classif = OneVsRestClassifier(estimator=SVC(random_state=0))

>>> classif.fit(X, y).predict(X)

array([0, 0, 1, 1, 2])

In the above case, the classifier is fit on a one-dimensional array of multiclass labels, so the predict() method provides the corresponding multiclass predictions. It is also possible to fit upon a two-dimensional array of binary label indicators:

>>> y = LabelBinarizer().fit_transform(y)

>>> classif.fit(X, y).predict(X)

array([[1, 0, 0],

[1, 0, 0],

[0, 1, 0],

[0, 0, 0],

[0, 0, 0]])

Here, the classifier is fit() on a two-dimensional binary label representation of y, obtained with LabelBinarizer. In this case, predict() returns a two-dimensional array representing the corresponding multilabel predictions.

Note that the fourth and fifth instances returned all zeros, indicating that they matched none of the three labels fit upon. With multilabel outputs, it is similarly possible for an instance to be assigned multiple labels:

>>> from sklearn.preprocessing import MultiLabelBinarizer

>>> y = [[0, 1], [0, 2], [1, 3], [0, 2, 3], [2, 4]]

>>> y = MultiLabelBinarizer().fit_transform(y)

>>> classif.fit(X, y).predict(X)

array([[1, 1, 0, 0, 0],

[1, 0, 1, 0, 0],

[0, 1, 0, 1, 0],

[1, 0, 1, 1, 0],

[0, 0, 1, 0, 1]])

In this case, the classifier is fit upon instances that are each assigned multiple labels. MultiLabelBinarizer is used to binarize the two-dimensional array of multilabels to fit upon. As a result, predict() returns a two-dimensional array with multiple predicted labels for each instance.
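To see what the binarized target looks like, and how to get back from indicator rows to label sets, the sketch below applies MultiLabelBinarizer on its own, using the same label lists as above. The variable names are chosen for this example.

```python
from sklearn.preprocessing import MultiLabelBinarizer

labels = [[0, 1], [0, 2], [1, 3], [0, 2, 3], [2, 4]]

mlb = MultiLabelBinarizer()
indicator = mlb.fit_transform(labels)

# One column per distinct label, in sorted order.
print(mlb.classes_)

# One row per instance; a 1 marks each label assigned to that instance.
print(indicator)

# inverse_transform maps indicator rows back to tuples of labels.
recovered = mlb.inverse_transform(indicator)
print(recovered)
```

The same inverse_transform call can be applied to the output of predict() to read multilabel predictions as label sets.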

© 2010-2016, scikit-learn developers (BSD license).
