Festival scraping with Python and Scrapy

Introduction

This article uses Scrapy, a web scraping framework for Python, to scrape festival listings.

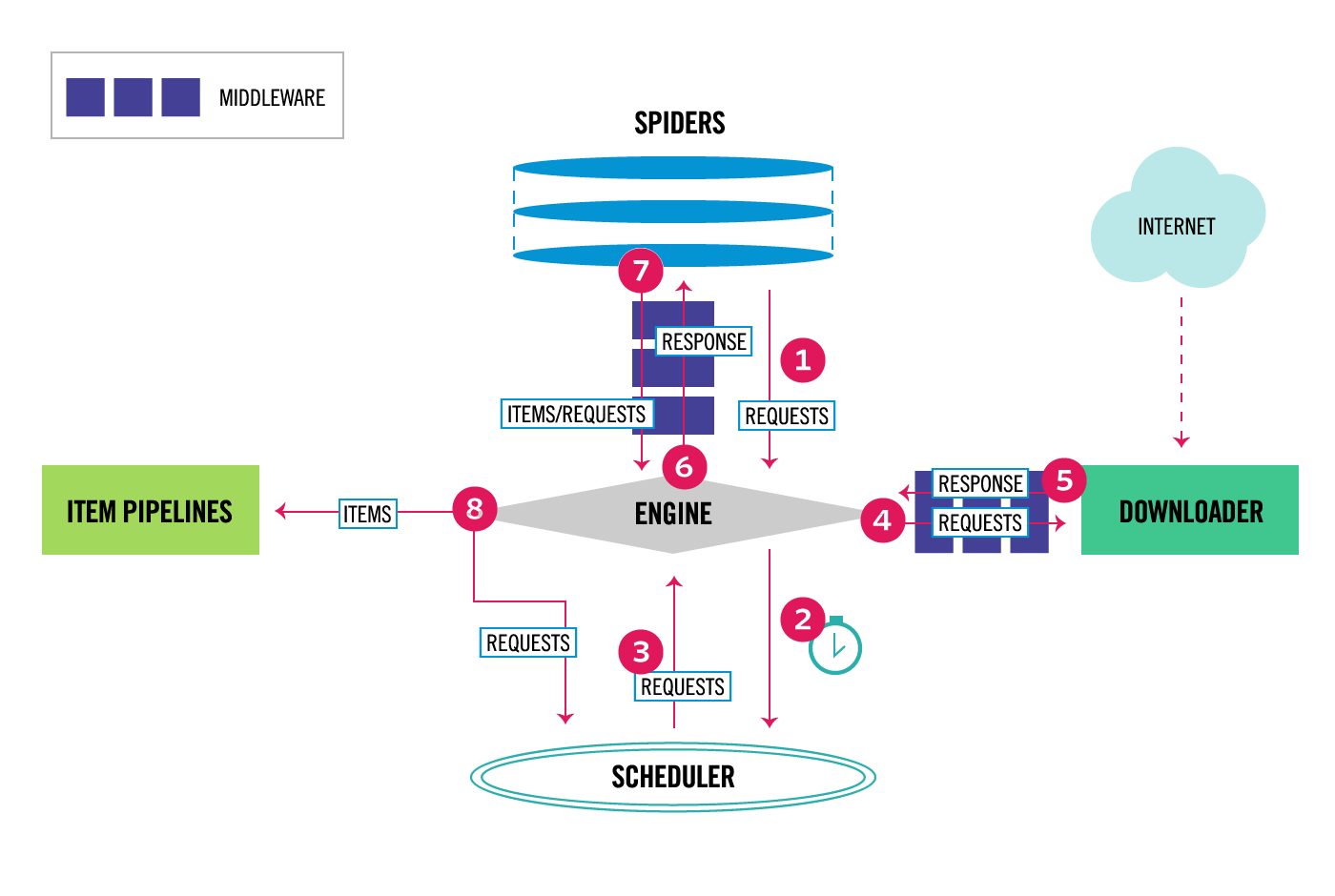

Scrapy architecture

The overview below is based on the architecture page of the official documentation (https://doc.scrapy.org/en/latest/topics/architecture.html).

Data flow

Components

Scrapy Engine

Controls the data flow between all the components, as shown in the data flow diagram above.
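The following is a toy, single-threaded model of the loop the Engine drives. It illustrates the data flow only and does not reflect how Scrapy is implemented. The real Engine is asynchronous and built on Twisted. The `fake_pages` dictionary, the `download` and `spider_parse` functions, and the example.com URLs are hypothetical stand-ins for the Downloader, a Spider, and real web pages.

```python
from collections import deque
from typing import Deque, Dict, Iterator, List, Tuple, Union

Item = Dict[str, str]
Request = str  # in this toy model a request is reduced to its URL

# Hypothetical "web": URL -> (page title, links found on that page)
fake_pages: Dict[str, Tuple[str, List[str]]] = {
    "http://example.com/": ("Top", ["http://example.com/a", "http://example.com/b"]),
    "http://example.com/a": ("Page A", []),
    "http://example.com/b": ("Page B", ["http://example.com/a"]),
}


def download(url: Request) -> Tuple[str, List[str]]:
    """Stand-in for the Downloader: 'fetch' a page from fake_pages."""
    return fake_pages[url]


def spider_parse(url: Request, page: Tuple[str, List[str]]) -> Iterator[Union[Item, Request]]:
    """Stand-in for a Spider callback: yield one item, then follow-up requests."""
    title, links = page
    yield {"url": url, "title": title}
    yield from links


def run_engine(start_url: Request) -> List[Item]:
    scheduler: Deque[Request] = deque([start_url])  # Scheduler: pending requests
    seen = {start_url}                              # never schedule a URL twice
    pipeline_output: List[Item] = []                # Item Pipeline: just collects items here
    while scheduler:
        url = scheduler.popleft()                   # Engine takes the next request from the Scheduler
        page = download(url)                        # ...has the Downloader fetch it
        for result in spider_parse(url, page):      # ...and hands the response to the Spider
            if isinstance(result, dict):
                pipeline_output.append(result)      # items go to the Item Pipeline
            elif result not in seen:
                seen.add(result)
                scheduler.append(result)            # new requests go back to the Scheduler
    return pipeline_output


for item in run_engine("http://example.com/"):
    print(item)
```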

Scheduler

Receives requests from the Scrapy Engine, queues them, and hands them back when the Engine asks for them later.
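The following sketch shows the Scheduler's job. It assumes a request can be reduced to a plain URL string. `ToyScheduler` is a hypothetical class. Its method names mirror `enqueue_request` and `next_request` on Scrapy's scheduler, but it leaves out priorities, disk queues, and request fingerprinting.

It does reproduce two default behaviors:

- Duplicate requests are dropped unless `dont_filter` is set.
- Pending requests come out in LIFO order. Scrapy's FAQ notes that the default queue is LIFO, so the crawl proceeds roughly depth-first.

```python
from typing import List, Optional, Set


class ToyScheduler:
    """Hypothetical, simplified scheduler: LIFO order plus a duplicate filter."""

    def __init__(self) -> None:
        self._stack: List[str] = []   # pending requests (URLs), last in, first out
        self._seen: Set[str] = set()  # URLs that have already been enqueued

    def enqueue_request(self, url: str, dont_filter: bool = False) -> bool:
        """Queue a request; return False if it was dropped as a duplicate."""
        if not dont_filter and url in self._seen:
            return False
        self._seen.add(url)
        self._stack.append(url)
        return True

    def next_request(self) -> Optional[str]:
        """Hand the next request back to the Engine, or None if nothing is queued."""
        return self._stack.pop() if self._stack else None


s = ToyScheduler()
s.enqueue_request("http://example.com/a")
s.enqueue_request("http://example.com/b")
s.enqueue_request("http://example.com/a")  # duplicate: filtered out
print(s.next_request())  # http://example.com/b (LIFO)
print(s.next_request())  # http://example.com/a
print(s.next_request())  # None
```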

Downloader

The Downloader fetches web pages and feeds them to the Scrapy Engine, which in turn passes them to the Spiders.

Spiders

Spiders are custom classes written by Scrapy users to parse responses and extract items (scraped data) or additional requests to follow.

This is the part I mainly customize myself.

Item Pipeline

The Item Pipeline processes items once the Spiders have extracted them. Typical tasks include cleansing, validation, and persistence (such as storing items in a database).

For example, you can write items to a JSON file or save them to a database.
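The following sketch covers the "write to a file" case. It follows the style of the JSON-writer example in Scrapy's Item Pipeline documentation. A pipeline is a plain Python class, so it needs no Scrapy imports. Scrapy calls `open_spider` and `close_spider` once per crawl and `process_item` for every item. The class name `JsonWriterPipeline` and the output file `festivals.jl` are assumptions for illustration.

```python
import json
from typing import IO, Any, Dict, Optional


class JsonWriterPipeline:
    """Sketch of an Item Pipeline that writes each item as one line of JSON."""

    def __init__(self, path: str = "festivals.jl") -> None:
        self.path = path  # hypothetical output file name
        self.file: Optional[IO[str]] = None

    def open_spider(self, spider: Any) -> None:
        # Called once when the spider starts
        self.file = open(self.path, "w", encoding="utf-8")

    def close_spider(self, spider: Any) -> None:
        # Called once when the spider finishes
        if self.file is not None:
            self.file.close()

    def process_item(self, item: Dict[str, Any], spider: Any) -> Dict[str, Any]:
        # Called for every scraped item; ensure_ascii=False keeps Japanese text readable
        if self.file is None:
            raise RuntimeError("open_spider() was not called")
        self.file.write(json.dumps(dict(item), ensure_ascii=False) + "\n")
        return item  # pass the item on to the next pipeline component
```

To enable the pipeline, register it in settings.py, for example `ITEM_PIPELINES = {'scraping.pipelines.JsonWriterPipeline': 300}`. The number (0 to 1000) sets the order in which pipelines run, lowest first.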

Downloader middlewares

Downloader middlewares sit between the Scrapy Engine and the Downloader. They are hooks that process requests on their way from the Engine to the Downloader, and responses on their way from the Downloader back to the Engine.

I do not use them in this article.
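This article does not use a downloader middleware, but the following minimal sketch shows where the hooks sit. `RequestLoggingMiddleware` is a hypothetical class. `process_request(request, spider)` and `process_response(request, response, spider)` are the hook methods Scrapy calls on a downloader middleware. Returning `None` from `process_request` lets the request continue to the Downloader. `process_response` must return a response or a new request (or raise `IgnoreRequest`).

```python
import logging
from typing import Any, Optional

logger = logging.getLogger(__name__)


class RequestLoggingMiddleware:
    """Hypothetical downloader middleware that logs traffic and counts requests."""

    def __init__(self) -> None:
        self.request_count = 0

    def process_request(self, request: Any, spider: Any) -> Optional[Any]:
        # Engine -> Downloader: called for every outgoing request
        self.request_count += 1
        logger.debug("Requesting %s", request.url)
        return None  # None means "keep processing this request normally"

    def process_response(self, request: Any, response: Any, spider: Any) -> Any:
        # Downloader -> Engine: called for every downloaded response
        logger.debug("Got status %s for %s", response.status, request.url)
        return response  # pass the (possibly modified) response on
```

To enable the middleware, add `DOWNLOADER_MIDDLEWARES = {'scraping.middlewares.RequestLoggingMiddleware': 543}` to settings.py.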

Spider middlewares

Spider middlewares are hooks that sit between the Scrapy Engine and the Spiders. They can process Spider input (responses) and output (items and requests).

I do not use them in this article either.
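This article does not use a spider middleware either, but here is a sketch. A spider middleware's `process_spider_output(response, result, spider)` hook receives everything a spider callback yields. It must return an iterable of items and requests.

The hypothetical `DropNamelessItemsMiddleware` drops items without a `name` and passes everything else through. It treats any mapping as an item, which assumes items are dicts or `scrapy.Item` objects (both behave as mappings). Validation like this is more often done in an Item Pipeline.

```python
from collections.abc import Mapping
from typing import Any, Iterable, Iterator


class DropNamelessItemsMiddleware:
    """Hypothetical spider middleware: filters out items that have no 'name'."""

    def process_spider_output(
        self, response: Any, result: Iterable[Any], spider: Any
    ) -> Iterator[Any]:
        # Spider -> Engine: sees every item and request the callback yields
        for obj in result:
            if isinstance(obj, Mapping) and not obj.get("name"):
                continue  # drop items (dicts or scrapy.Item) without a name
            yield obj     # requests and valid items pass through unchanged
```

To enable the middleware, add `SPIDER_MIDDLEWARES = {'scraping.middlewares.DropNamelessItemsMiddleware': 543}` to settings.py.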

Install Requirements

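Libraries required for installation

- `python-dev`, `zlib1g-dev`, `libxml2-dev` and `libxslt1-dev` are required for `lxml`
- `libssl-dev` and `libffi-dev` are required for `cryptography`

These are the Debian/Ubuntu package names. The Alpine-based Dockerfile below installs the Alpine equivalents (`zlib-dev`, `libxml2-dev`, `libxslt-dev`, `openssl-dev`, `libffi-dev`).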

Implementation steps for the festival scraping app

- Build a local development environment with Docker
- Create a project
- Implement the scraper
- Output the results as a JSON file

The target site for scraping is Let's Enjoy Tokyo (http://www.enjoytokyo.jp).

Creating a Dockerfile

```dockerfile
FROM python:3.6-alpine

RUN apk add --update --no-cache \
    build-base \
    python-dev \
    zlib-dev \
    libxml2-dev \
    libxslt-dev \
    openssl-dev \
    libffi-dev

ADD pip_requirements.txt /tmp/pip_requirements.txt
RUN pip install -r /tmp/pip_requirements.txt

ADD ./app /usr/src/app
WORKDIR /usr/src/app
```

`pip_requirements.txt` looks like this:

```
# Production
# =============================================================================
scrapy==1.4.0

# Development
# =============================================================================
flake8==3.3.0
flake8-mypy==17.3.3
mypy==0.511
```

Build the Docker image

```sh
docker build -t festival-crawler-app .
```

Start the Docker container

```sh
# Run this from the directory that contains the Dockerfile and app/
docker run -itd -v $(pwd)/app:/usr/src/app \
    --name festival-crawler-app \
    festival-crawler-app
```

Open a shell in the running container

```sh
docker exec -it festival-crawler-app /bin/sh
```

Creating a project

Run the following inside the container. The working directory is /usr/src/app.

```sh
# scrapy startproject <project_name> [project_dir]
scrapy startproject scraping .
```

The resulting directory layout looks like this.

Before creating the project

```
.
├── Dockerfile
├── app
└── pip_requirements.txt
```

After creating the project

```
.
├── Dockerfile
├── app
│   ├── scraping
│   │   ├── __init__.py
│   │   ├── items.py
│   │   ├── middlewares.py
│   │   ├── pipelines.py
│   │   ├── settings.py
│   │   └── spiders
│   │       └── __init__.py
│   └── scrapy.cfg
└── pip_requirements.txt
```

settings.py configuration

`DOWNLOAD_DELAY` sets how long (in seconds) the Downloader waits before downloading consecutive pages from the same website.

**Be sure to set this value so that you do not overload the website being scraped.**

Check the target site's robots.txt. If it specifies a `Crawl-delay`, it is a good idea to use that value.

```python
DOWNLOAD_DELAY = 10  # seconds
```
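Python's standard library can read `Crawl-delay` for you. The following sketch parses robots.txt text with `urllib.robotparser.RobotFileParser` and falls back to a default when no delay is given. The sample robots.txt content and the helper name `crawl_delay_from_robots` are hypothetical. For a real site you would download its robots.txt first, for example with `RobotFileParser.set_url()` and `read()`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; fetch the real one from the target site
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
Disallow: /private/
"""


def crawl_delay_from_robots(robots_txt: str, user_agent: str = "*",
                            default: float = 10.0) -> float:
    """Return the Crawl-delay for user_agent, or `default` if none is specified."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    delay = parser.crawl_delay(user_agent)  # None when no Crawl-delay applies
    return float(delay) if delay is not None else default


print(crawl_delay_from_robots(ROBOTS_TXT))  # 10.0
```

The result is the value to put into `DOWNLOAD_DELAY`. The `ROBOTSTXT_OBEY = True` setting in the generated settings.py makes Scrapy respect the Allow/Disallow rules. As far as I know it does not apply `Crawl-delay` automatically, which is why the delay is set by hand.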

Implementation of app/scraping/items.py

```python
# -*- coding: utf-8 -*-
import scrapy
from scrapy.loader.processors import (
    Identity,
    MapCompose,
    TakeFirst,
)
from w3lib.html import (
    remove_tags,
    strip_html5_whitespace,
)


class FestivalItem(scrapy.Item):
    # Festival name: strip HTML tags, keep the first value
    name = scrapy.Field(
        input_processor=MapCompose(remove_tags),
        output_processor=TakeFirst(),
    )
    # Holding period: strip tags and surrounding whitespace, keep the first value
    term_text = scrapy.Field(
        input_processor=MapCompose(remove_tags, strip_html5_whitespace),
        output_processor=TakeFirst(),
    )
    # Nearest stations: strip tags, keep every value as a list
    stations = scrapy.Field(
        input_processor=MapCompose(remove_tags),
        output_processor=Identity(),
    )
```

The Item class defines the data that the scraper handles. Here we define the following fields:

- Festival name (`name`)
- Holding period (`term_text`)
- Nearest stations (`stations`)

`input_processor` and `output_processor` are very convenient: they let you process the data when it is received and when it is output, respectively.
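To show what these processors do, here are simplified, hypothetical re-implementations of `MapCompose`, `TakeFirst`, and `Identity` in plain Python. They are not Scrapy's code. The real `MapCompose` also handles tuples, generators, and loader context. `toy_remove_tags` and `str.strip` are crude stand-ins for w3lib's `remove_tags` and `strip_html5_whitespace`.

In an `ItemLoader`, the input processor runs on each value as it is added (for example by `add_css`). The output processor runs on the collected list when `load_item()` is called.

```python
import re
from typing import Any, Callable, Iterable, List, Optional


def toy_remove_tags(text: str) -> str:
    """Crude stand-in for w3lib.html.remove_tags (regex-based, illustration only)."""
    return re.sub(r"<[^>]+>", "", text)


def toy_map_compose(*functions: Callable[[Any], Any]) -> Callable[[Iterable[Any]], List[Any]]:
    """Simplified MapCompose: apply each function to every value in turn."""
    def process(values: Iterable[Any]) -> List[Any]:
        current = list(values)
        for func in functions:
            next_values: List[Any] = []
            for value in current:
                result = func(value)
                if result is None:
                    continue                    # None results are dropped
                if isinstance(result, list):
                    next_values.extend(result)  # list results are flattened
                else:
                    next_values.append(result)
            current = next_values
        return current
    return process


def toy_take_first(values: Iterable[Any]) -> Optional[Any]:
    """Simplified TakeFirst: first value that is neither None nor an empty string."""
    for value in values:
        if value is not None and value != "":
            return value
    return None


def toy_identity(values: Iterable[Any]) -> List[Any]:
    """Simplified Identity: return the values unchanged (as a list)."""
    return list(values)


# term_text: input processor on the raw HTML, then output processor on the results
term_values = toy_map_compose(toy_remove_tags, str.strip)(["<span> 2017/07/07(Fri) </span>"])
print(toy_take_first(term_values))  # 2017/07/07(Fri)

# stations: every value is kept as a list
station_values = toy_map_compose(toy_remove_tags)(
    ["<p>Shinagawa Station</p>", "<p>Shin-Nihombashi Station</p>"]
)
print(toy_identity(station_values))  # ['Shinagawa Station', 'Shin-Nihombashi Station']
```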

Implementation of app/scraping/spiders/festival.py

```python
# -*- coding: utf-8 -*-
from typing import Generator

import scrapy
from scrapy.http import Request
from scrapy.http.response.html import HtmlResponse

from scraping.itemloaders import FestivalItemLoader


class FestivalSpider(scrapy.Spider):
    # Spider name used on the command line: scrapy crawl festival:august
    name: str = 'festival:august'

    def start_requests(self) -> Generator[Request, None, None]:
        # August event listing on Let's Enjoy Tokyo (category cate-94)
        url: str = (
            'http://www.enjoytokyo.jp'
            '/amuse/event/list/cate-94/august/'
        )
        yield Request(url=url, callback=self.parse)

    def parse(
        self,
        response: HtmlResponse,
    ) -> Generator[scrapy.Item, None, None]:
        # One <li> per festival in the result list
        for li in response.css('#result_list01 > li'):
            loader = FestivalItemLoader(
                response=response,
                selector=li,
            )
            # Register the CSS selectors for each field (see itemloaders.py)
            loader.set_load_items()
            yield loader.load_item()
```

This file contains the actual scraping logic.

The class variable `name: str = 'festival:august'` is the spider name you use on the command line. With this definition, you run the scraper with `scrapy crawl festival:august`.

Implementation of app/scraping/itemloaders.py

```python
# -*- coding: utf-8 -*-
from scrapy.loader import ItemLoader

from scraping.items import FestivalItem


class FestivalItemLoader(ItemLoader):
    default_item_class = FestivalItem

    def set_load_items(self) -> None:
        # Festival name
        s: str = '.rl_header .summary'
        self.add_css('name', s)
        # Holding period (text of the start-date element only)
        s = '.rl_main .dtstart::text'
        self.add_css('term_text', s)
        # Access information (nearest stations)
        s = '.rl_main .rl_shop .rl_shop_access'
        self.add_css('stations', s)
```

This may not be the intended usage, but I thought listing the CSS selectors in festival.py would be confusing, so I defined them in the `ItemLoader` subclass. `set_load_items` is a method I added for this project; it does not exist in Scrapy's `ItemLoader` class.

Scraping command

The output file is specified with the `-o` option.

To save the results to a database or elsewhere, you need to implement that separately (for example, in an Item Pipeline).

```sh
scrapy crawl festival:august -o result.json
```

This retrieves the festival information for August:

```json
[
    {
        "name": "ECO EDO Nihonbashi Art Aquarium 2017 ~ Edo / Goldfish Ryo ~ & Night Aquarium",
        "stations": [
            "Shin-Nihombashi Station"
        ],
        "term_text": "2017/07/07(Fri)"
    },
    {
        "name": "Milky Way Illuminations ~ Seien Campaign ~",
        "stations": [
            "Akabanebashi Station (5-minute walk)"
        ],
        "term_text": "2017/06/01(Thu)"
    },
    {
        "name": "Fireworks Aquarium by NAKED",
        "stations": [
            "Shinagawa Station"
        ],
        "term_text": "2017/07/08(Sat)"
    }
    ...
]
```

A real scraper would also need to handle pagination and parse the date data properly, but for now this was enough to scrape festival information quickly.
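As a starting point for the date handling, here is a minimal sketch that extracts the start date from a `term_text` value such as `"2017/07/07(Fri)"`. The function name `parse_term_start` is hypothetical. It assumes the text contains a `YYYY/MM/DD` date, as in the output above, and returns `None` otherwise.

```python
import re
from datetime import date
from typing import Optional

# Matches the YYYY/MM/DD part of a term_text value such as "2017/07/07(Fri)"
_DATE_RE = re.compile(r"(\d{4})/(\d{1,2})/(\d{1,2})")


def parse_term_start(term_text: str) -> Optional[date]:
    """Extract the start date from term_text; return None if no date is found."""
    match = _DATE_RE.search(term_text)
    if match is None:
        return None
    year, month, day = (int(part) for part in match.groups())
    return date(year, month, day)


print(parse_term_start("2017/07/07(Fri)"))  # 2017-07-07
print(parse_term_start("TBD"))              # None
```

One option would be to append this function to the `term_text` input processor, e.g. `MapCompose(remove_tags, strip_html5_whitespace, parse_term_start)`, since `MapCompose` drops `None` results. This is untested against the live site.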

The source code for this festival scraper is linked below.

That's all.
