[PYTHON] Using Docker and Jupyter to distribute execution environments for data analysis and visualization workshops

An easily reproducible data analysis and visualization environment

At work I sometimes give lectures on how to use various tools, and the recurring headache is how to distribute the execution environment for the samples and tutorials I prepare. A data analysis workflow rarely needs only a plain Python or R runtime. In most cases you first install a standard stack, such as SciPy / NumPy / Pandas for Python or [Bioconductor](http://www.bioconductor.org/) for R lectures aimed at biologists, and then install additional libraries to run the application you actually want. You can of course distribute a virtual machine image packed with everything, but then the file to distribute becomes large, and you still have to decide how to record and automate the steps that create the virtual machine.

Fortunately, by combining several tools, it has recently become possible to reproduce an entire environment for such relatively complicated tasks. This article shares an experimental method I recently tried in a tutorial at an academic conference. I would appreciate it if you could point out problems and suggest improvements. __This method is intended for data analysis and visualization workshops and tutorials, but with some modifications it can also be used as a starter kit for hackathons__.

Aligning the participants' environments

Any tutorial requires participants to work in the same environment. That is simple when the organizer can prepare all the machines in advance, but when participants bring their own machines, complicated software dependencies or a difficult installation become a real nuisance. On the day of the event, "It worked on my machine..." happens all too often. To solve this, I combined the following tools.

GitHub

I use GitHub to distribute the environment and the code. A virtual machine image is too large to distribute this way, but Docker, introduced next, avoids that problem.

Docker

Docker is used to distribute the analysis and visualization environment together with its dependencies. Many convenient environments are published on Docker Hub and can be used as they are, but in practice you usually need to add a few dependencies. Being able to build a new image by inheriting from a public one is a great advantage.

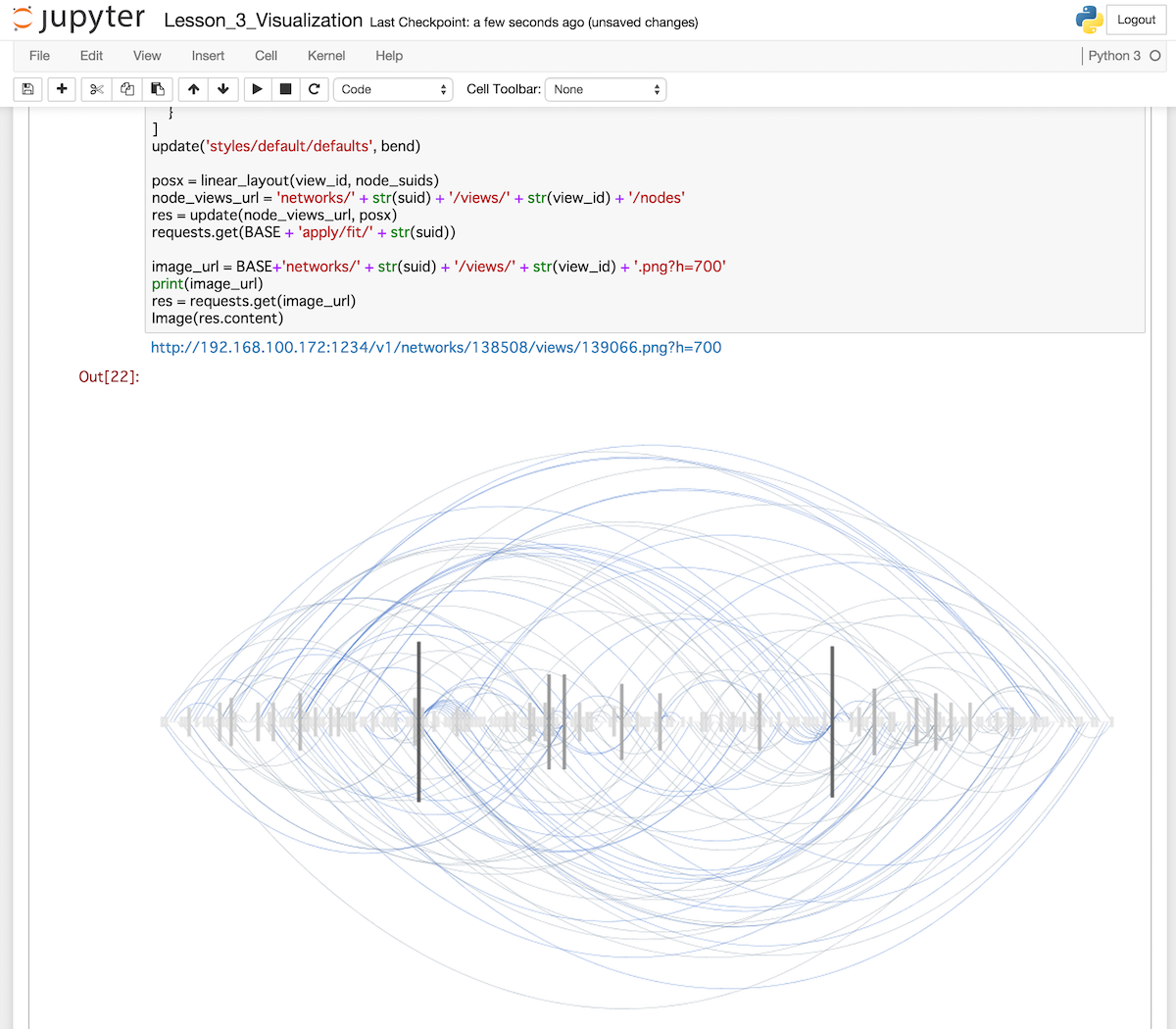

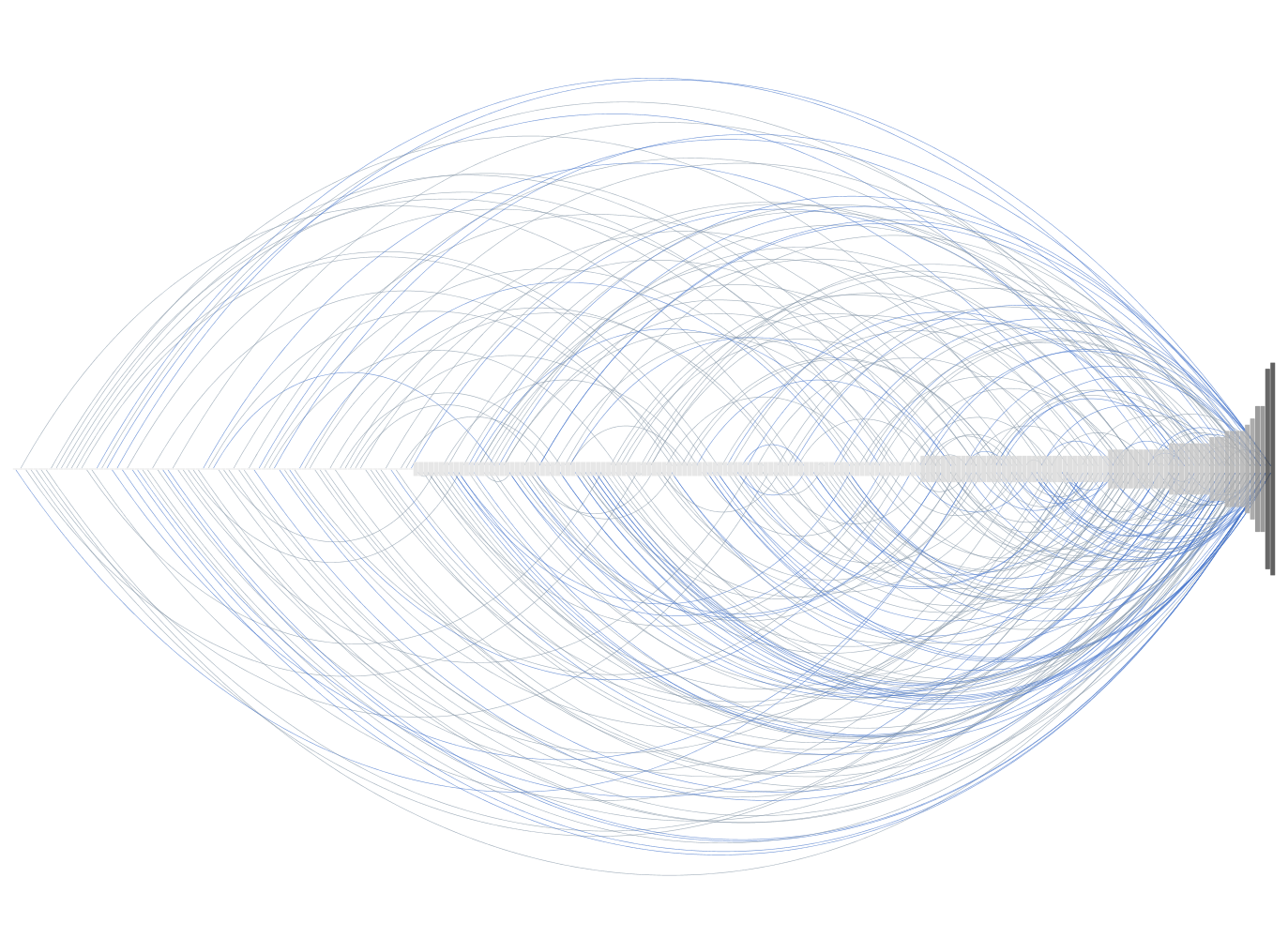

IPython Notebook / Jupyter

This tutorial covered graph data visualization with Python, so I used IPython Notebook to distribute the sample code. There are many articles about IPython Notebook, so please refer to those for details (from the next version, the notebook part and the language kernel part will become independent, and the notebook part will be called Jupyter). In addition, the IPython project itself maintains a Docker Hub repository that provides [an image with SciPy, NumPy, Pandas, etc. pre-installed](https://registry.hub.docker.com/u/ipython/scipyserver/). Specifying this image as the parent in your Dockerfile keeps your own additions to a minimum.
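To make the idea of "specifying this as the parent" concrete, here is a minimal Python sketch that renders such a Dockerfile as text. The parent image name comes from the article; the function name and the extra packages (`networkx`, `python-igraph`) are hypothetical placeholders, not the contents of the actual workshop Dockerfile.

```python
def render_dockerfile(parent_image, pip_packages):
    """Return Dockerfile text that extends parent_image with extra pip packages."""
    lines = [f"FROM {parent_image}"]
    if pip_packages:
        # A single RUN instruction keeps the number of image layers small.
        lines.append("RUN pip install " + " ".join(pip_packages))
    return "\n".join(lines) + "\n"


# Parent image named in the article; the extra packages are hypothetical examples.
dockerfile_text = render_dockerfile(
    "ipython/scipyserver", ["networkx", "python-igraph"]
)
print(dockerfile_text)
```

The point is only that the child Dockerfile stays short: one `FROM` line plus whatever the tutorial needs on top of the public image.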

Actual procedure

Target audience

This time the audience consisted mainly of people working at research institutes and government agencies: people who program as a means to an end but are not full-time programmers. If you are targeting programmers, some of the steps below can probably be omitted.

1. Announcements and software installation for participants

To focus on the essential parts of the lecture and avoid wasting time on installation, contact participants beforehand and ask them to install the required software on their laptops. The advantage of this method is that any workflow can be handled with just the following two tools installed.

Git

Git is unavoidable because everything needed is distributed through GitHub. It adds overhead, but this part is hard to simplify, so I ask participants to treat it as knowledge that will be useful in the future. In practice, being able to fork and clone an existing GitHub repository is enough for now.

GitHub account

Fortunately everyone already had an account this time, so it went smoothly, but you should tell participants in advance to create one so that this is not forgotten.

Docker and Boot2Docker

Installing Docker should be easy for anyone with some computer literacy. The lecture was in English this time, so I asked participants to refer to this video. It is a paid course, but the first three chapters, including the installation instructions, are available for free.

The official documentation is also extensive, so anyone who follows it should almost certainly be able to complete the setup. Most participants use Windows or Mac, so it is important to test the samples on all three platforms, including Linux. This time about 90% of the participants used Macs, which made things easier for me personally.
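A small pre-workshop check can be sent along with the announcement so that participants can confirm the two tools are installed. The sketch below is only an illustration using the Python standard library; the function name is hypothetical, and finding `docker` on `PATH` does not prove that Docker itself can start containers.

```python
import shutil


def missing_tools(required=("git", "docker"), which=shutil.which):
    """Return the names in `required` that cannot be found on PATH."""
    return [name for name in required if which(name) is None]


missing = missing_tools()
if missing:
    print("Please install before the workshop:", ", ".join(missing))
else:
    print("git and docker were both found on PATH.")
```

The `which` parameter exists only so the lookup can be replaced in tests; in normal use the default `shutil.which` is enough.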

2. Prepare a GitHub repository for sample code distribution

First, prepare a GitHub repository as a container for everything used in the lecture, except for large data files, and put what you need there.

For a lecture like this one, the basic structure of the repository looks like this:

    ~/g/vizbi-2015 git:master ❯❯❯ tree
    .
    ├── Dockerfile
    ├── LICENSE
    ├── README.md
    ├── graph-tool-pub-key.txt
    └── tutorials
        ├── Lesson_0_IPython_Notebook_Basics.ipynb
        ├── Lesson_1_Introduction_to_cyREST.ipynb
        ├── Lesson_2_Graph_Libraries.ipynb
        ├── Lesson_3_Visualization.ipynb
        ├── answers
        │   └── Lesson_1_Introduction_to_cyREST_answer.ipynb
        ├── data
        │   ├── galFiltered.gml
        │   ├── sample.dot
        │   └── yeast.json
        └── graph-tool-test.ipynb

    3 directories, 13 files

Let's look at the specific content.

Dockerfile

This defines the image used in the lecture. Strictly speaking, you could just publish the image to Docker Hub without it, but distributing the Dockerfile as well lets participants who later want to extend the environment build their own image right away.

README.md

Start with a quick start guide that covers the steps up to running the container. Also include a link to the detailed documentation. Because that documentation is revised frequently, I keep it somewhere like the GitHub Wiki.

Notebook-only directory (tutorials)

Keeping the .ipynb files together keeps the top-level directory clean. Save the runnable notebooks used in the actual workshop here.

Data directory (tutorials/data)

If the data used in the workshop is very small, put it in the repository as well. Opinions differ on what counts as large, but once the data reaches the megabyte range it is safer to consider a distribution method other than GitHub. For gigabytes of data, a USB memory stick is primitive but reliable: pass it around before the start and have each participant copy the data in turn.
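One way to apply this rule of thumb before committing is to scan the data directory for files above a size limit. The following is a minimal sketch; the 1 MB default is an assumption drawn from the "megabytes" guideline above, and the function name and demo file names are hypothetical.

```python
import os
import tempfile


def oversized_files(root, limit_bytes=1_000_000):
    """Return sorted (relative_path, size) pairs for files larger than limit_bytes."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            size = os.path.getsize(path)
            if size > limit_bytes:
                found.append((os.path.relpath(path, root), size))
    return sorted(found)


# Self-contained demonstration in a temporary directory with a tiny limit.
with tempfile.TemporaryDirectory() as tmp:
    with open(os.path.join(tmp, "small.json"), "wb") as f:
        f.write(b"x" * 10)
    with open(os.path.join(tmp, "large.gml"), "wb") as f:
        f.write(b"x" * 2_000)
    demo_result = oversized_files(tmp, limit_bytes=1_000)
    print(demo_result)
```

In a real repository you would call `oversized_files("tutorials/data")` and move anything it reports to another distribution channel.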

3. Testing in a real environment

Since the focus is on letting multiple people easily share the same environment, I do not teach Docker in depth. It is enough to explain that it is a portable and extensible execution environment. However, participants will get confused unless the following concept is conveyed clearly.

Data Volumes

Because participants learn by editing the notebooks themselves, mount a directory on the host as a data volume with the -v option. Be sure to read this section first to learn more about this feature.

Have participants enter the cloned directory and start the container with the following option, then confirm that edits made inside the container and files added on the host are reflected on the other side in real time.

-v $PWD:/notebook

__Note: If you are using Boot2Docker, the -v option can only mount directories under /Users (Mac OS X) or C:\Users (Windows)__. Several people got stuck because they specified a current directory outside these locations and could not mount it. There are no such restrictions on Linux.
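The mount option and the Boot2Docker restriction can be sketched together in Python: one helper checks whether a host directory lies under an allowed location, and another assembles the `docker run` arguments without executing them. The image name `example/workshop-image`, the port 8888, and both function names are assumptions for illustration only.

```python
from pathlib import PurePosixPath, PureWindowsPath


def mountable_under_boot2docker(host_dir):
    """Return True if host_dir is /Users or C:/Users, or lies beneath one of them."""
    if host_dir.startswith("/"):
        path, base = PurePosixPath(host_dir), PurePosixPath("/Users")
    else:
        path, base = PureWindowsPath(host_dir), PureWindowsPath("C:/Users")
    return path == base or base in path.parents


def docker_run_command(host_dir, image, container_dir="/notebook", port=8888):
    """Build (but do not execute) a `docker run` argument list with a host mount."""
    return [
        "docker", "run", "--rm", "-it",
        "-p", f"{port}:{port}",              # port number is an assumption
        "-v", f"{host_dir}:{container_dir}",  # same form as -v $PWD:/notebook
        image,
    ]


# Hypothetical host directory and image name.
cmd = docker_run_command("/Users/alice/vizbi-2015", "example/workshop-image")
print(" ".join(cmd))
```

Running the check before the workshop gives participants a clear message instead of a silently empty `/notebook` directory.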

Then run all the notebooks to check for problems. If you can follow the quick start and execute everything without errors, you are ready.
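Running every notebook by hand gets tedious, so this check can be scripted. The sketch below assumes a current Jupyter installation that provides the `jupyter nbconvert --execute` command, which is newer than the IPython Notebook setup described in this article; the helper names and the `executed` output directory are hypothetical. It only builds the commands and leaves the decision to run them to you.

```python
from pathlib import Path


def is_checkpoint(path):
    """Return True for autosave copies stored under .ipynb_checkpoints."""
    return ".ipynb_checkpoints" in Path(path).parts


def find_notebooks(root):
    """Return sorted .ipynb paths under root, skipping checkpoint copies."""
    return sorted(p for p in Path(root).rglob("*.ipynb") if not is_checkpoint(p))


def execute_command(notebook, output_dir):
    """Build the nbconvert command that runs one notebook from top to bottom."""
    return [
        "jupyter", "nbconvert", "--to", "notebook", "--execute",
        "--output-dir", str(output_dir), str(notebook),
    ]


# Example with one notebook name from the repository layout shown earlier.
example = execute_command(
    Path("tutorials") / "Lesson_0_IPython_Notebook_Basics.ipynb", "executed"
)
print(" ".join(example))
```

To run the check for real, pass each command from `execute_command` to `subprocess.run(..., check=True)` inside the container; a non-zero exit status then points at the notebook that fails.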

4. On the day

The lecture will probably go back and forth between slides and notebooks. It is convenient to use virtual desktops (Mission Control on Mac) to switch between a desktop for the slides and a desktop for running the notebooks.

The content is not very general and may not be helpful, but here are the slides and notebooks:

- VIZBI 2015 Tutorial: Cytoscape, IPython, Docker, and Reproducible Network Data Visualization Workflows

- Notebook and Dockerfile

Problems

I noticed some issues when trying this procedure, so I note them here.

Docker installation

Installing Docker is fairly easy, and well-maintained documentation and videos are available, yet some participants still stumbled on the installation. It seems that not everyone can complete an installation unless it works with zero configuration, either by double-clicking an installer or through a package manager such as brew / apt-get.

In this case, one participant did not understand the Boot2Docker step of adding export statements to .bashrc or a similar file to set a few environment variables.
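A quick diagnostic can tell participants which variables are still missing from their shell. The sketch below assumes that the variables in question are `DOCKER_HOST`, `DOCKER_CERT_PATH`, and `DOCKER_TLS_VERIFY`, the ones Boot2Docker asks users to export; the article does not name them, so treat the list as an assumption. The function name is hypothetical.

```python
import os

# Assumed to be the variables meant in the text; adjust if your setup differs.
REQUIRED_VARS = ("DOCKER_HOST", "DOCKER_CERT_PATH", "DOCKER_TLS_VERIFY")


def missing_docker_env(environ=None):
    """Return the required variable names that are unset or empty in environ."""
    env = os.environ if environ is None else environ
    return [name for name in REQUIRED_VARS if not env.get(name)]


not_set = missing_docker_env()
if not_set:
    print("Not set in this shell:", ", ".join(not_set))
else:
    print("All Boot2Docker-related variables are set.")
```

If the script reports missing names in a new terminal window, the export statements were most likely not added to the shell startup file.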

Required skills and final goal

Unfortunately, one participant lacked the prerequisite knowledge and could not follow at all. Such mismatches can happen, so it is important to communicate the final goal and the knowledge that is absolutely required in advance.

Image pull

As you know, running a Docker container is very lightweight, but the first run takes a long time because all the required image layers must be downloaded from Docker Hub. For this reason, I asked participants to run the distributed image once with the run command as homework, but I did not explain clearly why this was necessary, so some people needed a long time to start the container at the venue. The laboratory network was fairly fast this time, so it worked out, but with limited bandwidth it may not. The Docker installation document confirms that the installation works by logging in to the shell of an ubuntu container, but you should additionally tell participants to run the image used on the day in advance.
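The homework can be made checkable: a participant, or a helper at the door, can verify that the image is already in the local cache. The sketch below relies on `docker images -q IMAGE`, which prints image IDs only for images present locally; the function names and the injectable `run` parameter are hypothetical conveniences, and nothing is executed until you call the function.

```python
import subprocess


def pull_command(image):
    """Build the command participants should run at home to download the image."""
    return ["docker", "pull", image]


def image_is_cached(image, run=subprocess.run):
    """Return True if `docker images -q IMAGE` prints at least one image ID."""
    try:
        result = run(
            ["docker", "images", "-q", image],
            capture_output=True, text=True, check=False,
        )
    except FileNotFoundError:
        # The docker executable itself is missing, so nothing can be cached.
        return False
    return result.returncode == 0 and bool(result.stdout.strip())


print(" ".join(pull_command("ipython/scipyserver")))
```

Calling `image_is_cached("ipython/scipyserver")` on a participant's machine returns `False` when the download still has to happen, which is the case you want to catch before the venue network becomes the bottleneck.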

Summary

This was my first time using this method, so I was worried whether it would work, but I managed to get through it. I will keep looking for more efficient and reliable methods through future tutorials.
