Linux support for non-volatile memory (NVDIMM) (2019 supplement)

Introduction

(This article is the entry for day 24 of the Fujitsu Advent Calendar 2019. It reflects my personal opinions and does not represent my organization.)

So, this year too, I decided to write about NVDIMM, a type of non-volatile memory. I call this a supplement because I already covered the latest developments in a lecture this December, and here I intend to fill in the smaller topics that did not fit in the lecture's time slot.

To be honest, I did almost nothing on NVDIMM in 2019 for work-related reasons. However, probably because I have written about NVDIMM three times before, I seem to have become known as "the NVDIMM person" lately. Thanks to that, university professors who read my past articles have invited me to give lectures. Thank you. I have experienced first-hand that when you publish your output, it comes back to you in a good way, and I am deeply grateful.

- Lecture at Big Data Infra Study Group in March 2019

- Lecture at IPSJ ComSys 2019 in December 2019

The slides for both talks are available: the one from March and the one from IPSJ ComSys 2019 in December. The December version is updated with the latest status from the second half of the year.

- Non-volatile memory (NVDIMM) and Linux support trends

- Non-volatile memory (NVDIMM) and Linux support trends (for comsys 2019 ver.)

So, for this year's latest trends, please refer to the slides above (especially the December ones) before reading this article. Here, I would like to supplement them with topics that did not fit into the 45 minutes of the December lecture and with points I had not noticed at the time.

On non-volatile memory, Naomasa Matsubayashi has also published explanatory slides this year, titled "How to interact with memory that does not disappear even when the power is turned off". They cover the usage of PMDK's libpmemobj in more detail than my explanation, so you should refer to them as well.

1. Kernel feature for using non-volatile memory as volatile memory

Dave Hansen has developed a kernel feature for using NVDIMMs as large amounts of RAM. https://lkml.org/lkml/2019/1/24/1346

Some users wanted to use NVDIMMs just like conventional RAM, caring only about their large capacity rather than their non-volatility. For this reason, Intel has provided the following methods so far.

A) Memory Mode

In Memory Mode, hardware/firmware settings turn RAM into a cache and make the NVDIMM area look like an ordinary memory area. (By contrast, the mode that exposes NVDIMMs as genuinely non-volatile memory is called App Direct Mode.)

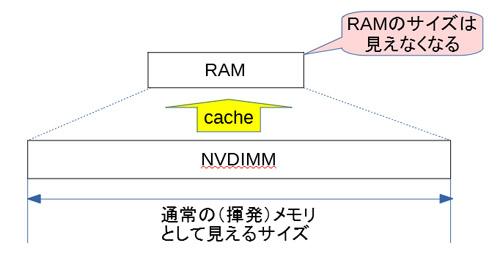

The advantage of Memory Mode is that no software changes are needed. However, because RAM is used as a cache, its capacity is not counted in the visible memory size; as the figure shows, the memory size equals the NVDIMM capacity alone. In addition, because RAM acts as a cache, software can neither predict nor control the change in latency when a cache miss occurs, which is another drawback.

B) Use NVDIMM as volatile memory through a library

This approach uses a library, such as libvmem from the (original) PMDK, to treat an NVDIMM area allocated as DAX as volatile memory. Of course, the area cannot be used as volatile memory without such a library, so middleware and applications need to support it. For this reason, it is a bit tedious to use.

That's where Dave's patch came in.

C) Expose NVDIMM as volatile memory at the kernel layer

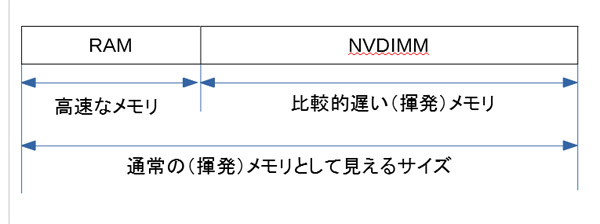

According to this patch, an area formatted as Device DAX is first detached from the Device DAX driver and then memory hot-added so that it appears as RAM. To the user, the NVDIMM then looks like somewhat slower RAM.

The advantages of this method are as follows.

- Unlike Memory Mode, both RAM and NVDIMM can be used as normal volatile memory, so the amount of memory that can be used as volatile memory is larger than in Memory Mode.

- The NVDIMM appears as a separate NUMA node, so you can choose between RAM and NVDIMM with numactl and similar tools. Frequently updated data can be placed on the RAM side, and large but infrequently updated data on the NVDIMM side.

The latter in particular is a welcome feature for anyone concerned about the durability of NVDIMMs.
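As a rough sketch of what this NUMA-based placement could look like in practice: the node numbers and program names below are my own assumptions for illustration (the real node IDs should be checked with `numactl --hardware`), and the helper only prints the command lines rather than running them.

```shell
#!/bin/sh
# Sketch: choose a NUMA node per workload with numactl --membind.
# Node numbers are assumptions; check `numactl --hardware` on the real machine.
DRAM_NODE=0    # hypothetical: node backed by ordinary RAM
PMEM_NODE=2    # hypothetical: node created by hot-adding the NVDIMM

# Print the numactl command line that binds a program's memory to one node.
membind_cmd() {
    node=$1
    shift
    echo "numactl --membind=$node $*"
}

# Frequently updated data goes to RAM (hypothetical program name).
membind_cmd "$DRAM_NODE" ./hot_data_app
# Large, rarely updated data goes to the NVDIMM (hypothetical program name).
membind_cmd "$PMEM_NODE" ./cold_data_app
```

Dropping the `echo` inside `membind_cmd` would turn the printed lines into real invocations.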

According to the last patch in this email series, once you have created a Device DAX area, you can enable the feature as follows.

To make this work, management software must remove the device from being controlled by the "Device DAX" infrastructure:

echo -n dax0.0 > /sys/bus/dax/drivers/device_dax/remove_id

echo -n dax0.0 > /sys/bus/dax/drivers/device_dax/unbind

and then bind it to this new driver:

echo -n dax0.0 > /sys/bus/dax/drivers/kmem/new_id

echo -n dax0.0 > /sys/bus/dax/drivers/kmem/bind
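The four steps quoted above can be wrapped in a small script. This is only a sketch: the function names and the `APPLY` switch are hypothetical, and by default it just prints what it would write so that it is safe to run on a machine without NVDIMMs.

```shell
#!/bin/sh
# Sketch: hand a Device DAX instance over to the kmem driver.
# Prints the steps by default; set APPLY=1 (as root) to really write to sysfs.
DAX_BUS=/sys/bus/dax/drivers

# $1 = value to write, $2 = sysfs file
sysfs_write() {
    if [ "${APPLY:-0}" = 1 ]; then
        printf '%s' "$1" > "$2"
    else
        echo "echo -n $1 > $2"
    fi
}

# $1 = device name such as dax0.0
dax_to_kmem() {
    dev=$1
    # Detach the device from the Device DAX driver ...
    sysfs_write "$dev" "$DAX_BUS/device_dax/remove_id"
    sysfs_write "$dev" "$DAX_BUS/device_dax/unbind"
    # ... and bind it to the kmem driver, which hot-adds it as RAM.
    sysfs_write "$dev" "$DAX_BUS/kmem/new_id"
    sysfs_write "$dev" "$DAX_BUS/kmem/bind"
}

dax_to_kmem dax0.0
```

As far as I know, newer ndctl releases also provide `daxctl reconfigure-device --mode=system-ram dax0.0`, which should achieve the same result without touching sysfs by hand, but I have not verified it here.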

2. The volatile memory libraries were removed from PMDK

By the way, how many of you noticed the "(original)" in the description of PMDK's volatile memory library above? PMDK's libvmem and libvmemalloc have left PMDK and now live in separate repositories. Intel had been saying "please use memkind" for the libvmem use case, and it seems they have finally been removed from PMDK itself.

https://pmem.io/2019/10/31/vmem-split.html

memkind does not seem to be supported on Windows, so libvmem still has a reason to exist. On Linux, however, it seems better to use memkind (or the kernel feature above) rather than libvmem.

3. dm-writecache

The ability to cache data on NVDIMM at the device-mapper layer was merged in kernel 4.18. The kernel has had dm-cache, which caches on SSD, since around v3.10; what distinguishes dm-writecache is that it can cache to NVDIMM as well as to fast SSDs such as NVMe. Caching slower storage media is arguably a classic use case for NVDIMM. However, unlike btt, which guarantees data in units of blocks, dm-writecache appears to manage data in units of the CPU cache, a form of control better suited to NVDIMM, so it can be expected to be faster than dm-cache.

Huaisheng Ye's presentation slides below are a good way to get an overview. They contain many figures, which should make it easy to picture how it works.

Dm-writecache: a New Type Low Latency Writecache Based on NVDIMM

With the older dm-cache, write-through is the default and write-back seems to be an option, whereas dm-writecache appears to be write-back (I also saw an article saying it has no write-through mode). According to the figures in the slides, reads are not cached and basically go straight to the HDD. It also seems that a read hits the cache only for data that was cached by a write. If that is correct, read caching is left to the kernel's existing page cache, and the design concentrates on making written data durable as quickly as possible, which strikes me as a very disciplined design.

According to the slides, it is set up as follows.

# lvcreate -n hdd_wc -L 4G vg /dev/sda1 (Device to be cached)

# lvcreate -n cache_wc -L 1G vg /dev/pmem0 (Device name of NVDIMM namespace set as Filesystem DAX)

# lvchange -a n vg/cache_wc

# lvconvert --type writecache --cachevol cache_wc vg/hdd_wc
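For reference, LVM drives the device-mapper writecache target underneath. The following is a minimal sketch of the table line that target takes, assuming the layout described in the kernel's writecache documentation; the helper name is hypothetical, and the device names and the 4 GiB size simply mirror the example above.

```shell
#!/bin/sh
# Sketch: build a device-mapper table line for the writecache target.
# Layout assumed from the kernel documentation:
#   <start> <length> writecache <p|s> <origin dev> <cache dev> <block size> <#optional args>
# "p" selects persistent memory as the cache device, "s" selects an SSD.
writecache_table() {
    origin=$1     # device to be cached
    cache=$2      # pmem device used as the cache
    sectors=$3    # size of the origin device in 512-byte sectors
    echo "0 $sectors writecache p $origin $cache 4096 0"
}

# 4 GiB is 8388608 sectors of 512 bytes.
writecache_table /dev/sda1 /dev/pmem0 8388608
```

On a real system the line could be passed to `dmsetup create <name> --table "..."`, with the sector count taken from `blockdev --getsz`. That path bypasses LVM's metadata, so it is probably best kept to experiments.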

Also, because it can use a Filesystem DAX namespace rather than a sector-mode namespace used as a block device, access to the NVDIMM appears to be optimized. The slides also include a performance comparison between dm-cache with the NVDIMM in sector mode and dm-writecache with it in Filesystem DAX mode, as well as an example of applying this to Ceph OSDs.

If I have time, I would like to try this out and verify it.

Summary

I have added a few things I could not talk about at ComSys 2019. I really wanted to try them out in an emulation environment, but I ran out of time. I would like to try each of them when I have time.

NVDIMM media can hardly be called readily available yet, but Linux support for NVDIMM keeps evolving little by little every year. Let's look forward to the trends in 2020.