Scraping in Python: Introduction to Scrapy, the Second Step

Background

I recently came across Scrapy, a scraping framework written in Python, and after trying it out I found it to be the most capable tool of its kind that I have used. Its specific strengths are as follows.

- A scraping job can be written with only a few settings and a short piece of code.

- Because it is code-based, you can write complex processing when you need to.

- It integrates with a cloud service called Scrapy Cloud, so you can deploy and run a crawler with a single command.

- The cloud service makes it easy to scale and provides scheduling, statistics, and monitoring.

The following articles are very helpful as an overview and introduction.

- Scrapy + Scrapy Cloud for a Comfortable Python Crawling and Scraping Life (Gunosy Data Analysis Blog)

- [How to make a crawler that can be used in practice without hassle (ITANDI BLOG)](http://tech.itandi.co.jp/2016/08/%E6%89%8B%E9%96%93%E3%82%92%E3%81%8B%E3%81%91%E3%81%9A%E3%81%AB%E5%AE%9F%E5%8B%99%E3%81%A7%E4%BD%BF%E3%81%88%E3%82%8B%E3%82%AF%E3%83%AD%E3%83%BC%E3%83%A9%E3%83%BC%E3%81%AE%E4%BD%9C%E3%82%8A%E6%96%B9/)

Purpose of this article

The articles above are sufficient as an overview and introduction, so here I have summarized some additional points that I needed when I tried various things myself. I have deliberately not repeated what those articles cover, so I recommend reading them first.

For each topic, I point to the relevant page of the official reference and add supplementary notes.

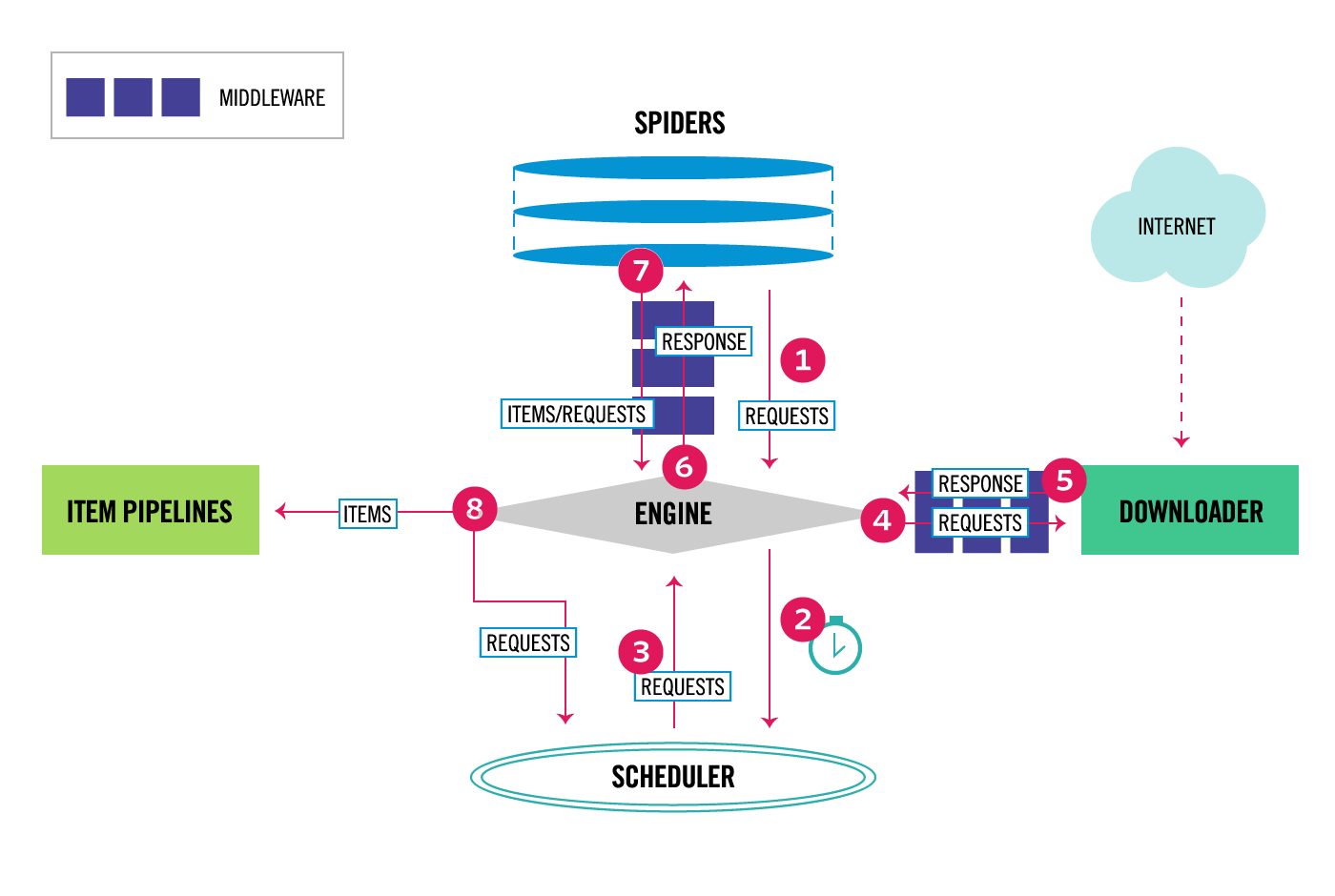

Architecture

A framework is convenient, but if you use it without knowing its internal structure you are left with only a vague understanding, so let's start with an overview. The official documentation has an architecture overview page.

Components

Scrapy Engine: the part that controls the data flow across the entire framework.

Scheduler: the part that manages requests to pages as a queue. It receives scrapy.Request objects (objects holding a URL and a callback function), queues them, and returns the next queued request when the Engine asks for one.

Downloader: the part that actually accesses web pages. It fetches a page and hands it back so that it can be passed to the Spider.

Spiders: the part that processes the fetched page data. This is the part you customize yourself. The processing you write falls roughly into the following two types:

- Extract data from elements of the web page, assign it to an Item object, and pass that object to the Item Pipeline.

- Extract the URLs you want to crawl next from the web page, create scrapy.Request objects, and pass them to the Scheduler.

Item Pipeline: the part that processes the Items extracted by the Spider. It performs data cleansing, validation, and saving to files or a database.

Middlewares: there are middleware layers in front of and behind the Downloader and the Spiders, so you can insert your own processing there.
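To make the Item Pipeline role described above a little more concrete, here is a minimal sketch of a cleansing step. As far as I understand, Scrapy only requires a pipeline to be a class with a `process_item(self, item, spider)` method. The class name `CleanTitlePipeline` and the `title` field are my own assumptions for illustration, and a plain dict stands in for a real Item.

```python
class CleanTitlePipeline:
    """Hypothetical pipeline that normalizes whitespace in the 'title' field."""

    def process_item(self, item, spider):
        # Called once for every item a spider yields.
        title = item.get("title")
        if title is not None:
            # Collapse runs of whitespace (including newlines) and trim both ends.
            item["title"] = " ".join(title.split())
        # Returning the item passes it on to the next pipeline stage.
        return item


# Stand-alone check: a dict plays the role of the Item, and no spider is needed.
cleaned = CleanTitlePipeline().process_item({"title": "  Hello \n  world "}, None)
print(cleaned)  # {'title': 'Hello world'}
```

In a real project a class like this would be enabled through the ITEM_PIPELINES setting in settings.py, using whatever module path your project has.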

Things to keep in mind

Basically, all you have to write is **the processing in the Spider, the definition of the Item class, the settings for the Item Pipeline, and other basic settings**. (Of course, you can customize the other parts too if you want to extend the framework.)

In addition, **the whole flow of "download data from a web page, save it somewhere, and then follow the next page" is split into components and processed asynchronously**. With this in mind, you can understand what happens when you create a scrapy.Request object in a Spider and hand it to the Scrapy Engine to specify the next page.

Data flow

The overall data flow is as follows.

1. First, the Engine gets the start URLs and passes Request objects with those URLs to the Scheduler.

2. The Engine asks the Scheduler for the next URL.

3. The Scheduler returns the next URL, and the Engine passes it to the Downloader.

4. The Downloader fetches the page data and returns a Response object to the Engine.

5. The Engine receives the Response and passes it to the Spider.

6. The Spider parses the page data and returns **Item objects with the data** and **Request objects with the URLs to scrape next** to the Engine.

7. The Engine receives them and passes the Item objects to the Item Pipeline and the Request objects to the Scheduler.

8. Repeat steps 2 through 7 until the queue is exhausted.

Once you understand the architecture, the whole flow becomes clear.
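The flow above can be mimicked with a toy model in plain Python. This is only a sketch to show who hands what to whom. It is not how Scrapy is implemented: it runs synchronously, whereas Scrapy processes requests asynchronously. The names `FAKE_PAGES`, `download`, `parse`, and `crawl` and the example.com URLs are all made up for illustration.

```python
from collections import deque

# Hypothetical in-memory "web": URL -> (titles on the page, links to follow).
FAKE_PAGES = {
    "https://example.com/1": (["a", "b"], ["https://example.com/2"]),
    "https://example.com/2": (["c"], []),
}


def download(url):
    """Stands in for the Downloader: returns a 'response' for a URL."""
    return {"url": url, "page": FAKE_PAGES[url]}


def parse(response):
    """Stands in for the Spider: yields items and follow-up requests."""
    titles, links = response["page"]
    for title in titles:
        yield {"type": "item", "title": title}
    for link in links:
        yield {"type": "request", "url": link}


def crawl(start_urls):
    """Stands in for the Engine: moves objects between the other parts."""
    scheduler = deque(start_urls)  # queue of pending URLs
    seen = set(start_urls)         # avoid queueing the same URL twice
    collected = []                 # what the Item Pipeline would receive
    while scheduler:               # repeat until the queue is exhausted
        response = download(scheduler.popleft())
        for result in parse(response):
            if result["type"] == "item":
                collected.append(result["title"])
            elif result["url"] not in seen:
                seen.add(result["url"])
                scheduler.append(result["url"])
    return collected


print(crawl(["https://example.com/1"]))  # ['a', 'b', 'c']
```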

Selector

Scrapy lets you use CSS selectors and XPath to get elements of the crawled page. The official reference is [here](https://doc.scrapy.org/en/latest/topics/selectors.html).

I use XPath because it gives me more flexibility. The following article and reference are helpful.

- Convenient XPath Summary (VASILY Developers blog)

- XPath Reference (MSDN, Microsoft)
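To show what an XPath expression of this kind selects, here is a small sketch that uses only the Python standard library on a made-up, well-formed HTML snippet. Note that `xml.etree.ElementTree` supports only a subset of XPath and has no `text()` step, so the text is read from `.text` instead. Inside a Spider or Scrapy Shell you would call `response.xpath(...)` with the full expression. The markup and the class name `list_title` are assumptions for this example.

```python
import xml.etree.ElementTree as ET

# Hypothetical page fragment. It must be well-formed XML for ElementTree.
html = """
<html><body>
  <div class="list_title"><a href="/articles/1">First headline</a></div>
  <div class="list_title"><a href="/articles/2">Second headline</a></div>
  <div class="other"><a href="/about">About</a></div>
</body></html>
""".strip()

root = ET.fromstring(html)

# Every direct child of each <div class="list_title">, anywhere in the tree.
elements = root.findall(".//div[@class='list_title']/*")
titles = [el.text for el in elements]

print(titles)  # ['First headline', 'Second headline']
```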

How to debug

The official reference has a page on debugging spiders.

Scrapy Shell

Scrapy has a handy debugging feature called Scrapy Shell.

When you start Scrapy Shell with the URL of the web page you want to fetch, IPython starts with several Scrapy objects, such as the response from the Downloader, already loaded. In this state you can debug the Selector code you write in your Spider.

% scrapy shell "https://gunosy.com/"

2016-09-05 10:38:56 [scrapy] INFO: Scrapy 1.1.2 started (bot: gunosynews)

2016-09-05 10:38:56 [scrapy] INFO: Overridden settings: {'LOGSTATS_INTERVAL': 0, 'NEWSPIDER_MODULE': 'gunosynews.spiders', 'DOWNLOAD_DELAY': 3, 'ROBOTSTXT_OBEY': True, 'SPIDER_MODULES': ['gunosynews.spiders'], 'DUPEFILTER_CLASS': 'scrapy.dupefilters.BaseDupeFilter', 'BOT_NAME': 'gunosynews'}

---

2016-09-05 10:38:56 [scrapy] DEBUG: Telnet console listening on 127.0.0.1:6023

2016-09-05 10:38:56 [scrapy] INFO: Spider opened

2016-09-05 10:38:57 [scrapy] DEBUG: Crawled (200) <GET https://gunosy.com/robots.txt> (referer: None)

2016-09-05 10:39:01 [scrapy] DEBUG: Crawled (200) <GET https://gunosy.com/> (referer: None)

##↓ Scrapy objects available in Scrapy Shell

[s] Available Scrapy objects:

[s] crawler <scrapy.crawler.Crawler object at 0x10ff54fd0>

[s] item {}

[s] request <GET https://gunosy.com/>

[s] response <200 https://gunosy.com/>

[s] settings <scrapy.settings.Settings object at 0x110b0ef28>

[s] spider <GunosynewsSpider 'gunosy' at 0x110d40d68>

[s] Useful shortcuts:

[s] shelp() Shell help (print this help)

[s] fetch(req_or_url) Fetch request (or URL) and update local objects

[s] view(response) View response in a browser

##↓ Check if you can get it as expected

>>> response.xpath('//div[@class="list_title"]/*/text()').extract()

['Sanada Maru "Inubushi" is inundated with praise "Amazing times" "Tears do not stop"...Voice of admiration for Oizumi's acting', 'You can cry if Kairos appears on a 10km egg! The 10km you walked was useless! Pokemon GO1...', '"Ame Talk!" Advance to Golden on Sunday Thursday midnight and unusual twice a week', 'Sagan Tosu Toyoda Surprise Appears at Local School Cultural Festival AKB and Hepatitis Prevention PR', 'Kengo Nishioka, the center of Majipuri, said "PON!"I will do my best to the first" weather brother "!"', 'The United States is paying attention to Nishikori's backhand that has advanced to the best 16!', 'Ichiro hits 2 games in a row! "4" in 26th place in history with 3016 hits in total', 'Heartless KO defense=Nao Inoue who had a backache-WBO / S flyweight', 'Terashima and Fujihira are the strongest pitchers in high school in Japan.', 'Ichiro's first misstep in two seasons U.S. commentator rages at Ace's negligent play "Jose's responsibility"', 'The earth is too small! The "infinity of the universe" in the video comparing the sizes of the planets is dangerous', 'Popular account "Okegawa cat" stopped Twitter. I can't finish the reason...', 'Where did you learn!?11 people whose talents have blossomed in the wrong direction', 'Daughter and boyfriend "flirt drive" → Father becomes surprised afterwards...!', '[Howahowa ♡] Kyun Kyun doesn't stop at the "innocence" of playing with small kittens and puppies!', 'The prestigious Idemitsu tragedy...Suddenly the founder is confused by management intervention, and the country-led industry reorganization is on the verge of bankruptcy', '5th morning "Osaka 8.8 million training" was held. Received an emergency email that makes a sound when you are in Osaka.', 'At the age of 16, he was already a "sex beast"! Testimony to Yuta Takahata, "I was about to become a rope for sex crimes."', 'Move the Tsukiji Market as soon as possible! 
If you think about the people of Tokyo, there is only one answer', 'Toru Hashimoto "Confrontation or cooperation with the Tokyo Metropolitan Assembly! Mr. Koike must be hungry soon"', 'A policeman who leaked a car sex image taken during patrol was detained, and the woman who was photographed committed suicide-China', 'Low quality Korean tour erupts anger from Chinese travelers to Japan-Chinese media', 'Tea that was introduced from China to Japan, "Matcha" is reimported and is popular=China coverage', '"Too Japan!" For productions that strongly influence Chinese movies, dramas, and Japan', 'The American Museum of Art exhibits a "distorted map", the Great Wall of China extending to the Korean Peninsula=Korea Net "Japan is terrible, but China is even more...', 'Is it amazing to take it off? Five features of a sensual female body', 'What's wrong with "homes without landlines" on the school network?', 'Is that so! Two super surprising "gifts that should not be given to men"', 'Don't touch it! 4 parts that become sensitive during menstruation', '"lost hope...Various examples of unfortunate parcels delivered overseas', 'It doesn't feel strange! Nonsta Inoue and Ocarina exchange faces! The topic is that it doesn't feel strange at all', 'Sanko launches rechargeable air duster "SHU"', 'Smart strap "Sgnl" that allows you to make phone calls using your finger as a handset', 'A movie of Apple's Siri being struck by Microsoft's Cortana', 'Google useful techniques-Organize files in Google Drive', 'You can buy it at Sapporo Station without hesitation! 5 unmistakable souvenirs', 'Discovered "Bento sales that are too beautiful" in Shinjuku → 400 yen Bento is a level that makes you want to go to work on Saturdays and Sundays!', 'Ramen Jiro's shopkeeper confesses his true feelings/Even if one person eats early, it doesn't mean that the other customers are late. "Everyone has to do their best."', 'Cat! Cat! Cat! 3 cat motif gifts that cat lovers will definitely love', 'Beer goes on! 
I made a simple snack of hanpen and corned beef', 'I'm addicted to it again ♪ New work "Six!] Is a topical drop game made by a team of big hits [Game review that can be read in just 10 seconds]', '[Super Careful Selection] Two recommended games of the week selected by the Game8 editorial department! This week, "Lost Maze" and "Flip"...', 'Healing simulation game "Midori no Hoshiboshi" that greens desolate stars [Weekend Review]', 'Death game "Mr" who achieved 5 million DL in 4 days of release.Jump (Mr. Jump) "', 'A total attack of up to 16 bodies is a masterpiece! The real thrill of RPG is here. "BLAZING ODYSSEY...']

Parse command

After implementing the Spider, check it with the parse command.

% scrapy parse --spider=gunosy "https://gunosy.com/categories/1"

2016-09-05 10:57:26 [scrapy] INFO: Scrapy 1.1.2 started (bot: gunosynews)

2016-09-05 10:57:26 [scrapy] INFO: Overridden settings: {'NEWSPIDER_MODULE': 'gunosynews.spiders', 'DOWNLOAD_DELAY': 3, 'BOT_NAME': 'gunosynews', 'ROBOTSTXT_OBEY': True, 'SPIDER_MODULES':

---

'start_time': datetime.datetime(2016, 9, 5, 1, 57, 26, 384501)}

2016-09-05 10:57:30 [scrapy] INFO: Spider closed (finished)

>>> STATUS DEPTH LEVEL 1 <<<

# Scraped Items ------------------------------------------------------------

[{'subcategory': 'movies',

'title': 'Kuranosuke Sasaki x Kyoko Fukada "Super High Speed! "Returns" is "Give back"',

'url': 'https://gunosy.com/articles/R07rY'},

{'subcategory': 'Performing arts',

'title': 'Indians of unknown birth win the championship, Muchamcha is amazing "Food Wars Soma 2nd Plate" 10 episodes',

'url': 'https://gunosy.com/articles/Rb0dc'},

{'subcategory': 'TV set',

'title': 'Solving the mystery "Hugging Asunaro". Line 132 from Takuya Kimura to Hidetoshi Nishijima "Toto Neechan"',

'url': 'https://gunosy.com/articles/RrtZJ'},

{'subcategory': 'Performing arts',

'title': 'T-shirts that combine "Barefoot Gen" and 90's club culture are awesome',

'url': 'https://gunosy.com/articles/aw7bd'},

{'subcategory': 'Cartoon',

'title': 'The modern history of Japan itself ... A farewell voice from Taiwanese fans to complete "Kochikame"=Taiwan media',

'url': 'https://gunosy.com/articles/Rmd7L'},

---

{'subcategory': 'TV set',

'title': 'Ame Talk!Twice a week!!Sunday Golden advance',

'url': 'https://gunosy.com/articles/afncS'},

{'subcategory': 'Cartoon',

'title': 'Decided to animate "Interviews with Monster Girls", which depicts the daily lives of high school girls who are a little "unusual"',

'url': 'https://gunosy.com/articles/aan1a'}]

# Requests -----------------------------------------------------------------

[]

There are also other debugging methods, such as Open in browser, which lets you check the contents of a Response directly in the browser, and Logging.

How to specify the output

The official reference has a page on feed exports.

Format (json, jsonlines, xml, csv)

Just set FEED_FORMAT in settings.py.

settings.py

FEED_FORMAT = 'csv'

Previously only Python 2 was supported, so Japanese text in output files and logs was written as escape sequences. **Scrapy and Scrapy Cloud both added Python 3 support in May 2016**, and Scrapy itself seems to be gradually being updated so that each format is output without escaping.

- As of September 5, 2016, the xml and csv formats are written without Unicode escaping, so you can read Japanese in the output file as is. json and jsonlines are not yet handled this way, so if you want to use them you have to extend the exporter yourself, as described in the following article: [Python: Comfortable web scraping with Scrapy and BeautifulSoup4](http://momijiame.tumblr.com/post/114579225706/python-scrapy-%E3%81%A8-beautifulsoup4-%E3%82%92%E4%BD%BF%E3%81%A3%E3%81%9F%E5%BF%AB%E9%81%A9-web-%E3%82%B9%E3%82%AF%E3%83%AC%E3%82%A4%E3%83%94%E3%83%B3%E3%82%B0)
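The escaping itself is easy to reproduce with the standard `json` module. The sketch below is not the exporter extension from the linked article. It only shows the underlying behavior that such an extension has to switch off, using a made-up item. As far as I know, later Scrapy releases (1.2 and up) also have a FEED_EXPORT_ENCODING setting for this purpose.

```python
import json

item = {"title": "日本語"}

# Default behavior: non-ASCII characters are written as \uXXXX escapes.
escaped = json.dumps(item)

# With ensure_ascii=False the characters are written as they are.
readable = json.dumps(item, ensure_ascii=False)

print(escaped)   # {"title": "\u65e5\u672c\u8a9e"}
print(readable)  # {"title": "日本語"}
```

Both strings decode back to the same data. The difference only matters when a person reads the file directly.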

Save destination (local, ftp, s3)

Just set FEED_URI in settings.py appropriately.

settings.py

FEED_URI = 'file:///tmp/export.csv'

If you use S3, you also need to specify an access key ID and a secret access key, but it is still very easy to use.

settings.py

FEED_URI = 's3://your-bucket-name/export.csv'

AWS_ACCESS_KEY_ID = 'YOUR_ACCESS_KEY_ID'

AWS_SECRET_ACCESS_KEY = 'YOUR_SECRET_ACCESS_KEY'

If you want to save to S3 from a local environment, install botocore or boto. They seem to be preinstalled on Scrapy Cloud, so no action is required there.

% pip install botocore

How to specify the file name dynamically

In addition, the file name can be specified dynamically. By default, the following two variables are defined.

- %(time)s - gets replaced by a timestamp when the feed is being created

- %(name)s - gets replaced by the spider name

You can also add your own variables by defining them as attributes in the Spider's code. The default %(time)s is not in Japan time, so here is an example that builds the output directory and file name from Japan time.

gunosy.py

# -*- coding: utf-8 -*-

import scrapy

from pytz import timezone

from datetime import datetime as dt

from gunosynews.items import GunosynewsItem

class GunosynewsSpider(scrapy.Spider):

    name = "gunosy"

    allowed_domains = ["gunosy.com"]

    start_urls = (

        'https://gunosy.com/categories/1',  # Entertainment

        'https://gunosy.com/categories/2',  # Sports

        'https://gunosy.com/categories/3',  # Interesting

        'https://gunosy.com/categories/4',  # Domestic

        'https://gunosy.com/categories/5',  # Overseas

        'https://gunosy.com/categories/6',  # Column

        'https://gunosy.com/categories/7',  # IT / Science

        'https://gunosy.com/categories/8',  # Gourmet

    )

    # Variables for the output path.

    # Spider attributes defined here can be referenced from FEED_URI.

    now = dt.now(timezone('Asia/Tokyo'))

    date = now.strftime('%Y-%m-%d')

    jst_time = now.strftime('%Y-%m-%dT%H-%M-%S')

settings.py

FEED_URI = 's3://your-bucket-name/%(name)s/dt=%(date)s/%(jst_time)s.csv'

FEED_FORMAT = 'csv'

AWS_ACCESS_KEY_ID = 'YOUR_ACCESS_KEY_ID'

AWS_SECRET_ACCESS_KEY = 'YOUR_SECRET_ACCESS_KEY'
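The placeholders in FEED_URI use Python's named %-style formatting, so you can check outside Scrapy what a template like the one above expands to. The following is a sketch using only the standard library. The helper `expand_feed_uri` is a name I made up, and a fixed UTC+9 offset stands in for pytz's 'Asia/Tokyo' (Japan has no daylight saving time, so the two should agree).

```python
from datetime import datetime, timedelta, timezone

# Fixed offset for Japan Standard Time (UTC+9).
JST = timezone(timedelta(hours=9), "JST")


def expand_feed_uri(template, spider_name, now):
    """Fill a FEED_URI-style template the way the example spider's attributes would."""
    params = {
        "name": spider_name,
        "date": now.strftime("%Y-%m-%d"),
        "jst_time": now.strftime("%Y-%m-%dT%H-%M-%S"),
    }
    return template % params


template = "s3://your-bucket-name/%(name)s/dt=%(date)s/%(jst_time)s.csv"

# 01:57:26 UTC corresponds to 10:57:26 in Japan.
now = datetime(2016, 9, 5, 1, 57, 26, tzinfo=timezone.utc).astimezone(JST)

print(expand_feed_uri(template, "gunosy", now))
# s3://your-bucket-name/gunosy/dt=2016-09-05/2016-09-05T10-57-26.csv
```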

How to specify the environment on Scrapy Cloud

How to specify python3

For more information on Python 3 support, see here. As of September 5, 2016, Scrapy Cloud uses Python 2 unless you specify otherwise.

To run on Python 3 on Scrapy Cloud, add the following settings to the scrapinghub.yml file that is created when you run the `shub deploy` command.

scrapinghub.yml

projects:

  default: 99999

stacks:

  default: scrapy:1.1-py3

How to include dependent packages

If you want to use a library within your Scrapy code, write the package name in requirements.txt and reference that file in scrapinghub.yml.

requirements.txt

pytz

scrapinghub.yml

projects:

  default: 99999

stacks:

  default: scrapy:1.1-py3

requirements_file: requirements.txt

That's all.
